Content warning: this investigation addresses sensitive issues related to the sexualization of minors and child pornography.

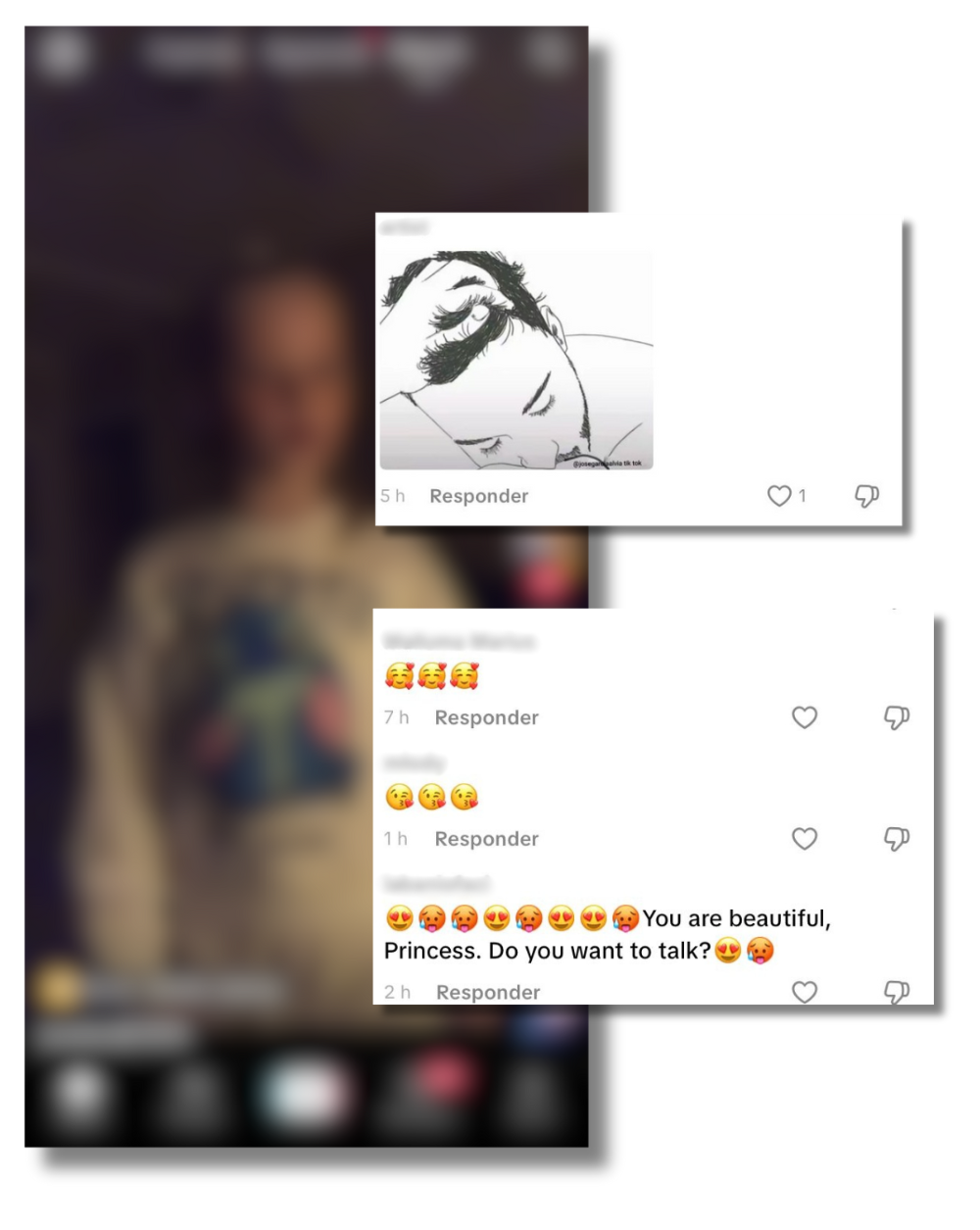

Pepe opens TikTok and the first thing he sees is a video of a minor in uniform preparing food with her feet. The video is labeled “generated with AI” by the platform. He swipes up and a real teenager appears, dancing. He scrolls again and now there are two young girls singing. In the comments, he sees dozens of sexually explicit messages directed at them (such as “they have to learn sooner or later” or “everything fits now”); other comments promote Telegram accounts where child pornography is sold.

This is what the “For You” page looks like for Pepe, the account we used for this investigation.

Pepe is not real; he is the profile we used to investigate TikTok accounts that make money by publishing content that sexualizes minors. This is what we found: TikTok's algorithm recommended this type of video in the “For You” feed and in the search tab. It drew us into a loop of posts sexualizing girls and teenagers and left us just a few clicks away from child pornography, both real and AI-generated. This happens even though the platform must comply with the obligations of the Digital Services Act (DSA) on the protection of minors, must remove illegal content once it knows that content is circulating, and prohibits the sexualization of minors in its own rules.

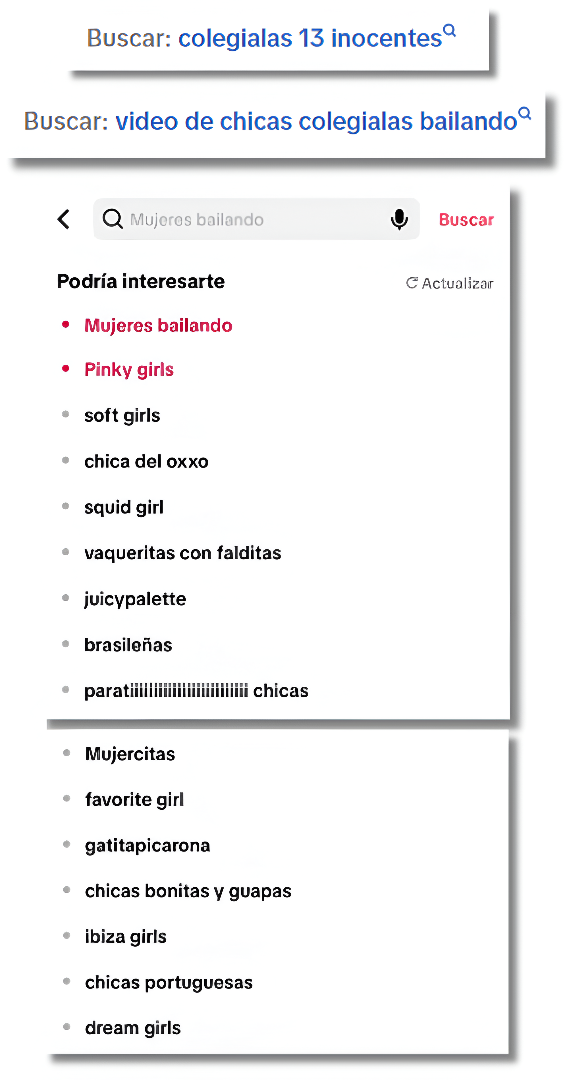

“13 innocent schoolgirls”: one of the searches TikTok recommended to us after showing us, in the “For You” feed, videos that sexualize real and AI-generated minors, with user comments offering child pornography

TikTok decides what to show its users through its recommendation algorithm, which suggests content based on their tastes and habits. This also applies to Pepe, who wants to see videos of minors in sexual poses. We verified this with the account we used for this analysis: Pepe's “For You” page pulled him into a spiral of videos sexualizing minors, repeatedly showing us real and AI-generated girls and teenagers in videos full of sexually explicit comments.

On several occasions, the algorithm showed Pepe profiles that appeared to belong to real minors. One example is a recommended video of a real little girl dancing, with more than 5,000 views, whose comments include images of oral sex scenes and messages telling the minor that she is beautiful and that they want to talk to her.

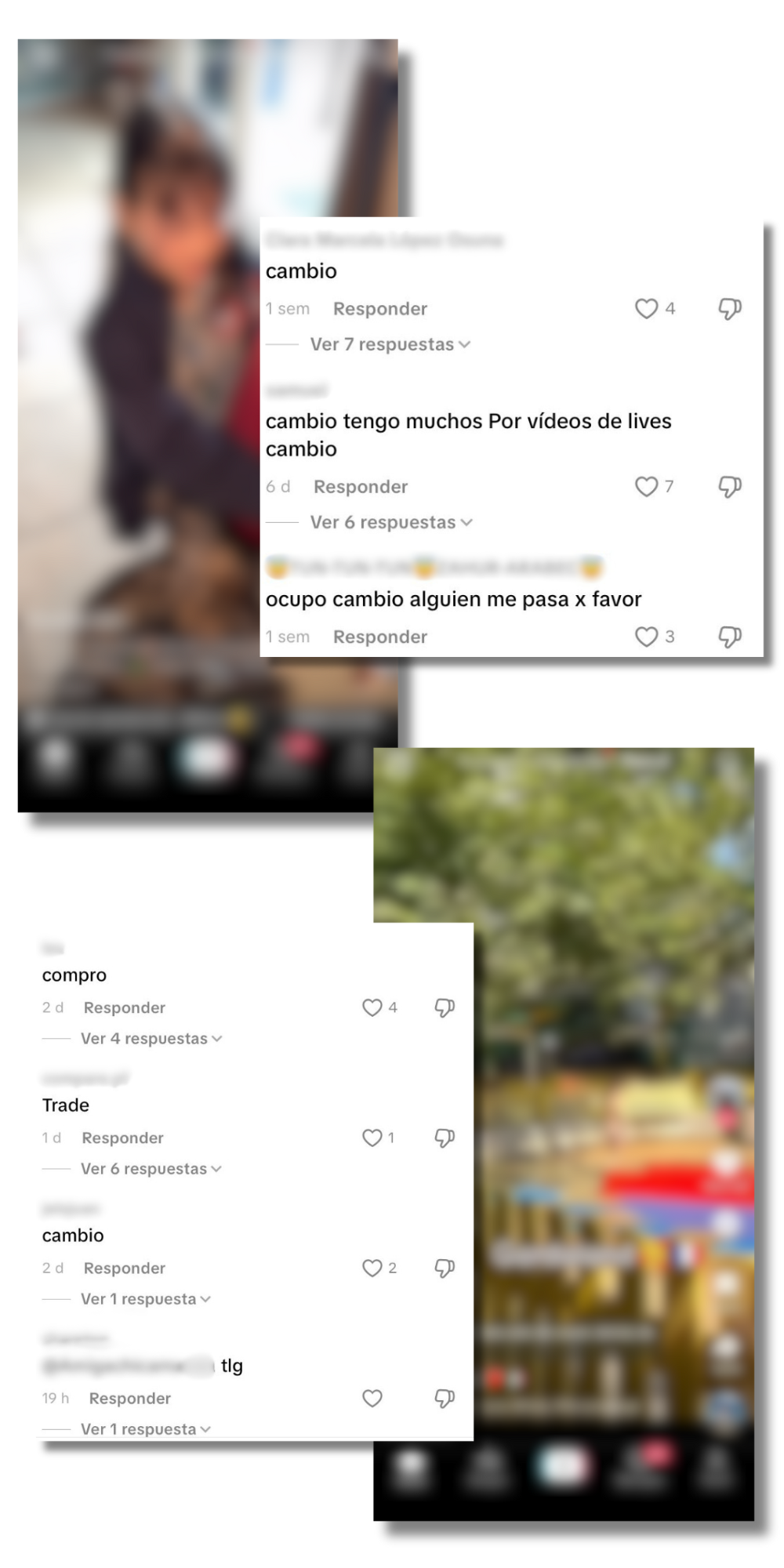

Another pattern we detected in Pepe's feed is that most of the recommended videos, whether they featured real minors or were created with AI, had comments promoting Telegram accounts that sell or exchange real child pornography. One example is a post with 1.4 million views showing a girl playing with Christmas decorations, where we found several comments saying “exchange,” the term these accounts use for trading child pornography, which often takes place via Telegram. The same happens in another video, with more than 655,000 views, showing a young girl in a swimsuit playing in a park, where we found more comments of this kind (“exchange,” “exchange and sell,” or “tlg [Telegram],” for example). In other words, TikTok's own algorithm puts Pepe, who could be a pedophile, just a few clicks away from child pornography.

The algorithm also suggested searches such as “13 innocent schoolgirls,” “schoolgirls dancing,” “young women,” and “pretty and beautiful girls.” TikTok's “For You” feed even recommended that we subscribe to the news feed (a channel creators use to communicate with their followers) of an account whose description read “here you will find the most beautiful and sexy schoolgirls.” According to the platform, these recommendations are based on the user's interactions, content information, and user information.

Mamen Bueno, a health psychologist and psychotherapist who has lent us her superpowers, warns that these suggestions can cause “[adults] to enter a spiral of content that normalizes the sexualization of children and can modulate the perception of risk, creating a sense of ‘accessibility’ and availability of material.” The expert points out that “these types of loops can reinforce cognitive biases and lower the moral barriers of some vulnerable users.” Bueno added that “the platform itself is inadvertently promoting the exposure of minors to potentially harmful views. This increases the risk of recruitment, grooming, or harassment.”

The Digital Services Act requires TikTok to assess and reduce the risks that the platform may pose to minors and their rights.

Under the Digital Services Act, TikTok must assess whether its features, such as its recommendation algorithms and content moderation systems, may pose risks that have a negative impact on minors and their rights (Article 34). It also has an obligation to take effective measures to reduce those risks (Article 35).

“If the design of the recommendation algorithm and the discovery system help these types of accounts grow and monetize, the platform is failing in its duty to assess and reduce systemic risks to minors,” Marcos Judel, a lawyer specializing in data protection, AI, and digital law, tells Maldita.es. Moreover, the European Commission and the European Board for Digital Services have already warned about the specific risks that generative AI and the manipulation of services pose to the protection of minors.

TikTok's subscription system also contributes to the spread of these videos: 13 of the accounts analyzed by Maldita.es use it to charge an average of four euros for exclusive content and additional features, and they “share” up to 50% of this revenue with TikTok. On the profits that both these users and the platform make, Judel emphasizes that “under the DSA, monetizing content that violates the fundamental rights of minors is incompatible with safety-by-design obligations.”

The platform must also remove illegal content if it is aware that it is circulating

Regarding its obligations to remove content, the platform “is only liable to the extent that it is aware of the illegal nature of the content,” Rahul Uttamchandani, a lawyer specializing in technology and privacy, explains to Maldita.es. “If the platform has actual knowledge, either through its detection system, user reports, or external investigations, and does not act diligently, the problem ceases to be solely that of the user and becomes also that of the platform, which may be penalized,” warns Judel.

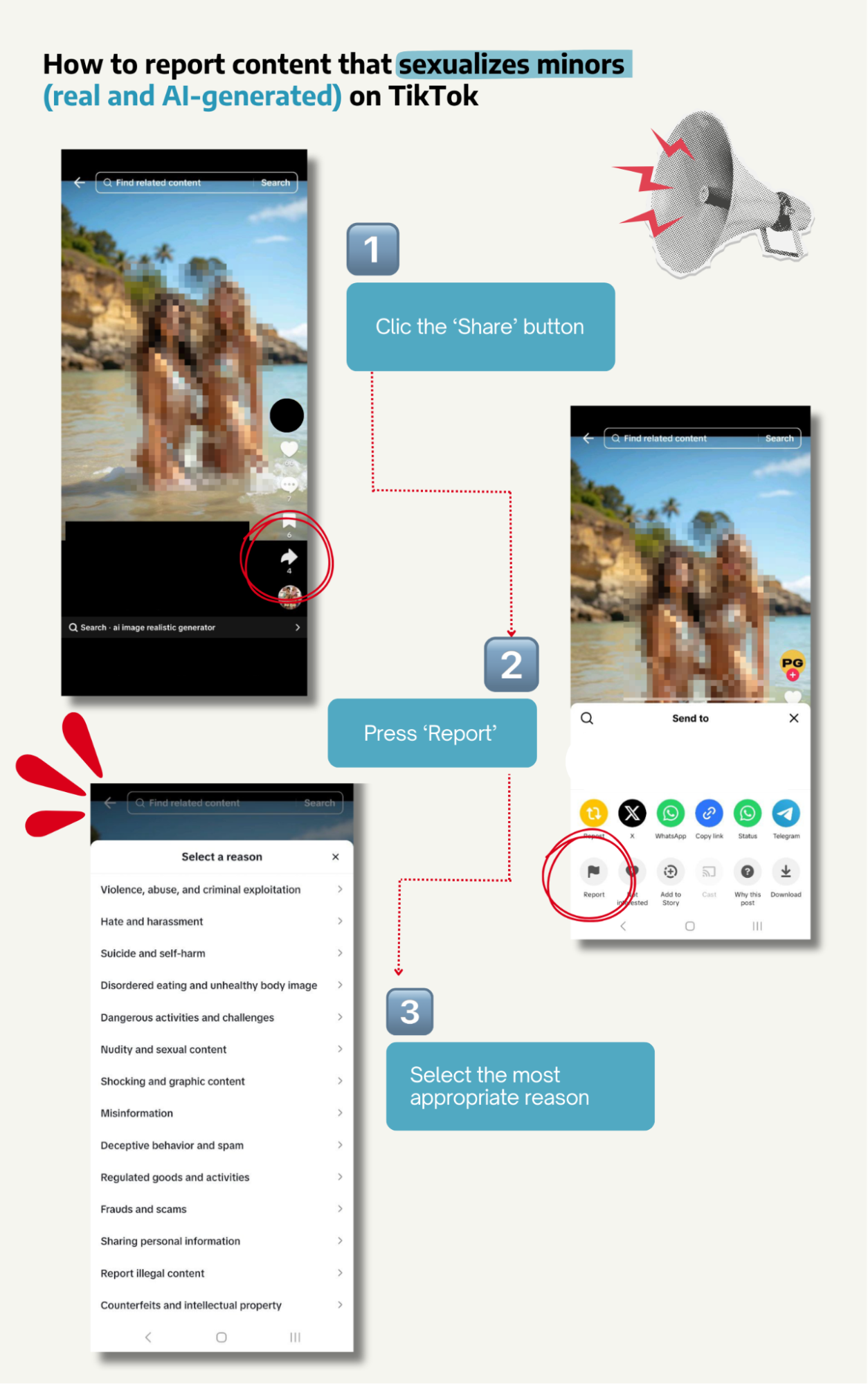

Whether an account compiles videos of real minors or publishes AI-generated content, users can report these posts to alert TikTok that they are circulating.

Using TikTok's reporting mechanisms, Maldita.es alerted the platform to accounts that publish AI-generated videos sexualizing minors:

We selected 15 profiles that shared sexual content of minors generated with AI and reported them for violating the platform's internal policies.

TikTok specifies that accounts “focused on AI images of young people in adult attire, or sexualized poses or facial expressions” are prohibited. However, it found no violation in any of the accounts except one, and the restrictions it applied to that account did not prevent further access to its videos. TikTok maintained its position after we appealed the decision.

We also reported 60 specific videos from these accounts: 72 hours later, TikTok had removed only 7 of them and had disabled “For You” recommendations for 11.

These obligations also apply to the comments on these profiles' posts that redirected users to Telegram accounts where child pornography is sold and exchanged. Judel explains that TikTok must comply with three obligations: "Remove them diligently as soon as it becomes aware of them; prevent them from being systematically linked or going viral; and strengthen automatic and manual filters. If it fails to do so, it begins to assume responsibility as a hosting provider that tolerates the dissemination of illegal content."

What matters is not whether the content is posted or commented on from abroad, but whether it is accessible from the European Union. If the European Commission finds that a platform has seriously breached the DSA, it can impose fines of up to 6% of the company's worldwide annual turnover.

According to TikTok's Community Guidelines Enforcement Report, between January and March 2025, more than 26% of the content the platform removed for violating its “child safety and well-being” policy showed sexually suggestive images of minors or significantly exposed minors' bodies, showing them naked or semi-naked. The report states that most of this content (more than 90%) was removed within 24 hours.

The maldita Mamen Bueno, health psychologist and psychotherapist, has contributed her superpowers to this article.

Mamen Bueno is part of Superpoderosas, a project by Maldita.es that seeks to increase the presence of women scientists and experts in public discourse through collaboration in the fight against misinformation.