Content warning: this investigation addresses sensitive topics related to the sexualization of minors and child pornography.

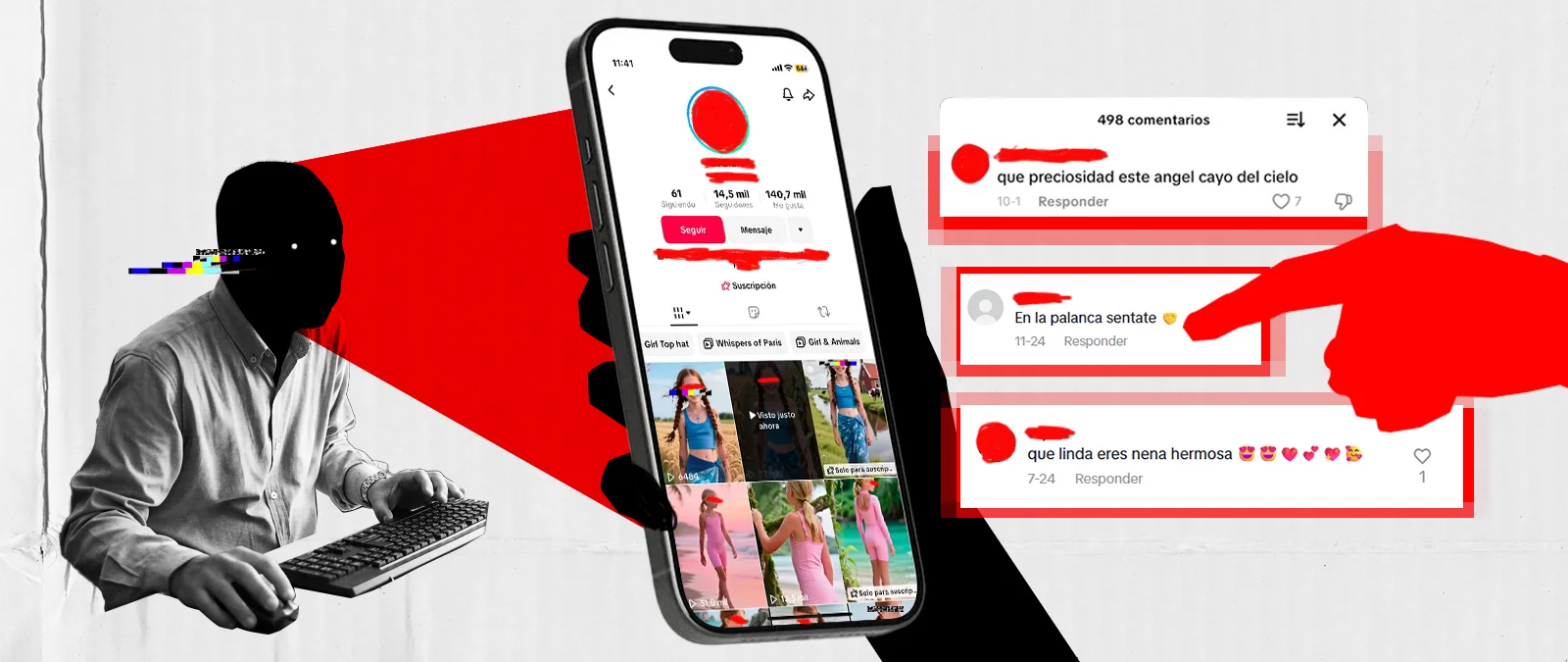

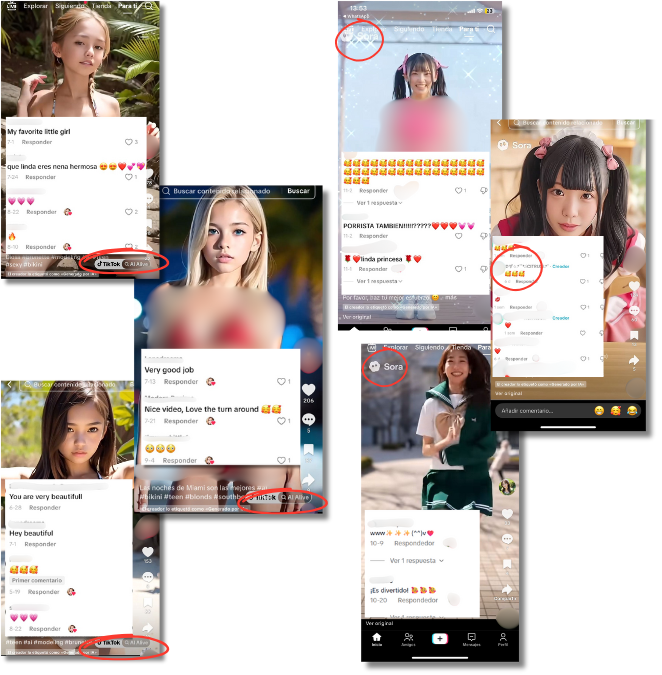

“The hottest girls generated here,” “AI-created videos of teenage girls,” “small modeling videos.” This is how some TikTok accounts that use artificial intelligence to sexualize girls and teenagers present themselves. They have built an entire community around it: just 20 of these accounts (there are more) have accumulated 553,180 followers and more than 5.7 million likes. Their posts are full of comments with sexual suggestions toward the alleged minors from users who appear to be adults; some users also promote Telegram accounts where they sell child pornography of real minors.

These accounts profit by selling sexually explicit videos and images of minors created with AI through TikTok's subscription system (a portion of the profits goes to the platform) and also outside the platform. The girls in the videos are not real, but the effects of this content are: experts consulted by Maldita.es warn that these videos, which can be prosecuted as child pornography, contribute to normalizing the sexualization of children and reinforce harmful consumption patterns for minors.

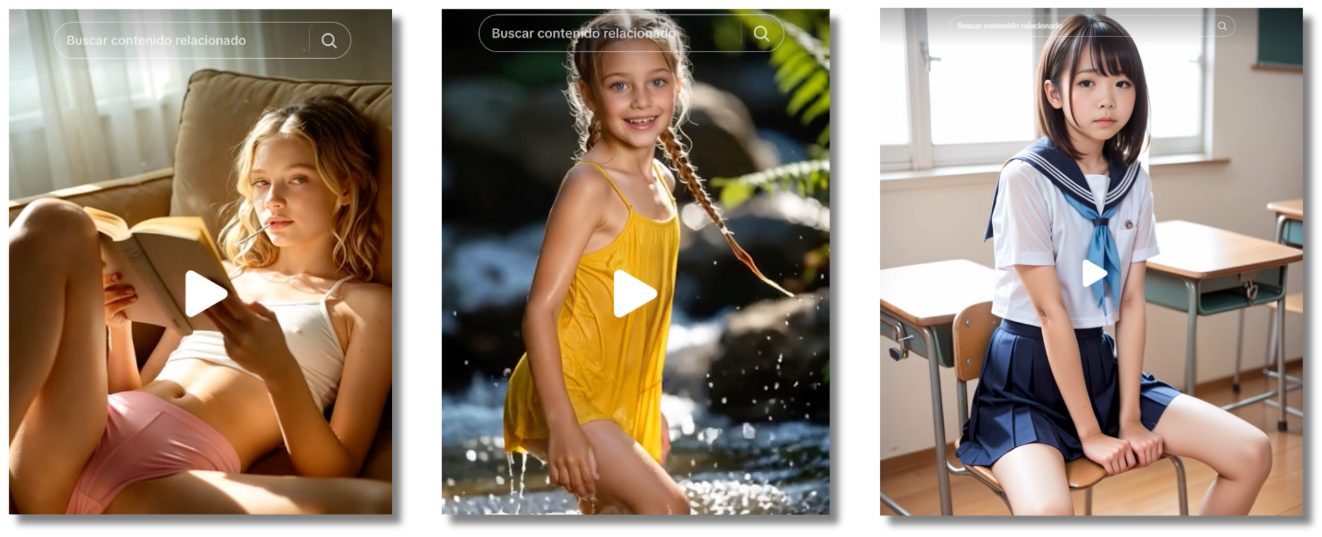

Girls and teenagers in bikinis, uniforms, or tight clothing: the AI-generated videos posted by these accounts are filled with sexual comments about minors

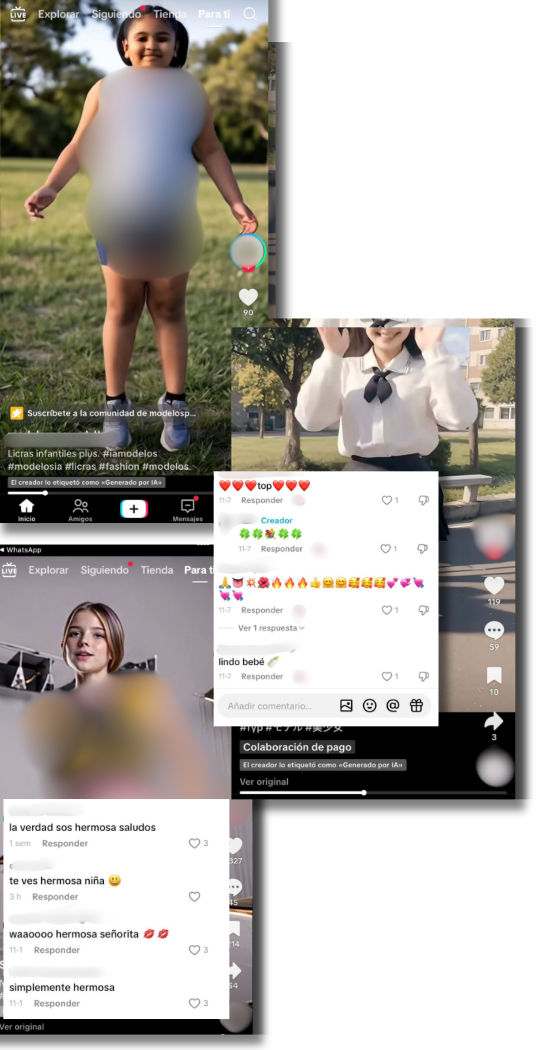

Sexualization of minors on demand, with the help of generative AI. This is what is offered in the more than 5,200 videos posted by the accounts analyzed by Maldita.es. The protagonists are usually young girls and teenagers, but the situations in which they are portrayed vary. The common factor: the posts are filled with comments in which users (many with profile photos of adult men) sexualize them, sometimes with sexually explicit messages, or leave heart and fire emojis.

There are dozens of AI-generated videos and images showing girls and teenagers in bikinis or tight clothing: the videos focus on showing the breasts and legs of the alleged minors. There are also recurring videos of minors jumping, either wearing sportswear that highlights their genitals and nipples, or school uniforms.

Others may seem more innocent at first glance, as the minors appear fully clothed. But they are not innocent, as the comments in different languages reveal. There are videos showing girls sticking out their tongues or eating ice cream with user comments saying “all the way” or “they have to learn sooner or later.” One of the accounts analyzed has posted several AI-generated videos of teenagers preparing food with their feet: one of them, with over 643,000 views on TikTok, has comments saying “spread your legs” or “eat and suck your fingers.”

There are videos created with AI showing girls in school uniforms yawning or waving their hands: in the comments, users leave images of the alleged sexual acts they represent, such as oral sex or masturbation. We also found a video set in a high school where several girls, apparently unconscious, receive mouth-to-mouth resuscitation from their classmates and teachers, who touch their breasts and other parts of their bodies.

Several of the accounts analyzed by Maldita.es redirected us, either through the link in their bios or by direct message, to websites where sexual videos and images of minors created with AI are sold. In addition, six of the 20 profiles that publish this AI-generated content sexualizing minors use TikTok's subscription system, which allows them to charge for exclusive content and additional features. Subscriptions cost an average of €3.87. A portion of these earnings goes to the social network (50% after the iOS or Google payment platform commission of 15-30%, as explained on its website).

TikTok's generative AI and OpenAI's Sora, two of the tools used to create these sexualized videos of minors

All the accounts analyzed by Maldita.es use TikTok's “AI-generated content” label or a hashtag or comment revealing the synthetic origin of their content. However, most of the videos analyzed do not have visible watermarks revealing which tools were used to create them.

There are some exceptions. One of the profiles analyzed posted several videos of girls and teenagers in bikinis with the TikTok AI Alive watermark, a feature that uses artificial intelligence to “transform static photos into dynamic and immersive videos,” according to TikTok. Another account (which redirects to X, where it posts links to a pornography website) has several videos of cheerleaders and girls in school and maid uniforms jumping, which feature the watermark of Sora, OpenAI's video creation tool.

After Maldita.es reported 15 of these accounts for violating TikTok's policies, all of them are still available.

The platform's community guidelines state that “accounts focused on AI images of youth in clothing suited for adults, or sexualized poses or facial expressions” are not allowed. They say the same of content created with artificial intelligence that is a “sexualized, fetishized, or victimizing depiction.” Maldita.es reported a selection of 15 profiles using the channels TikTok provides for this purpose, yet 72 hours later all the reported accounts were still available on the platform. TikTok found no violation of its policies in the vast majority of cases; the exception was one account, which was subject to temporary restrictions that did not prevent access to its content.

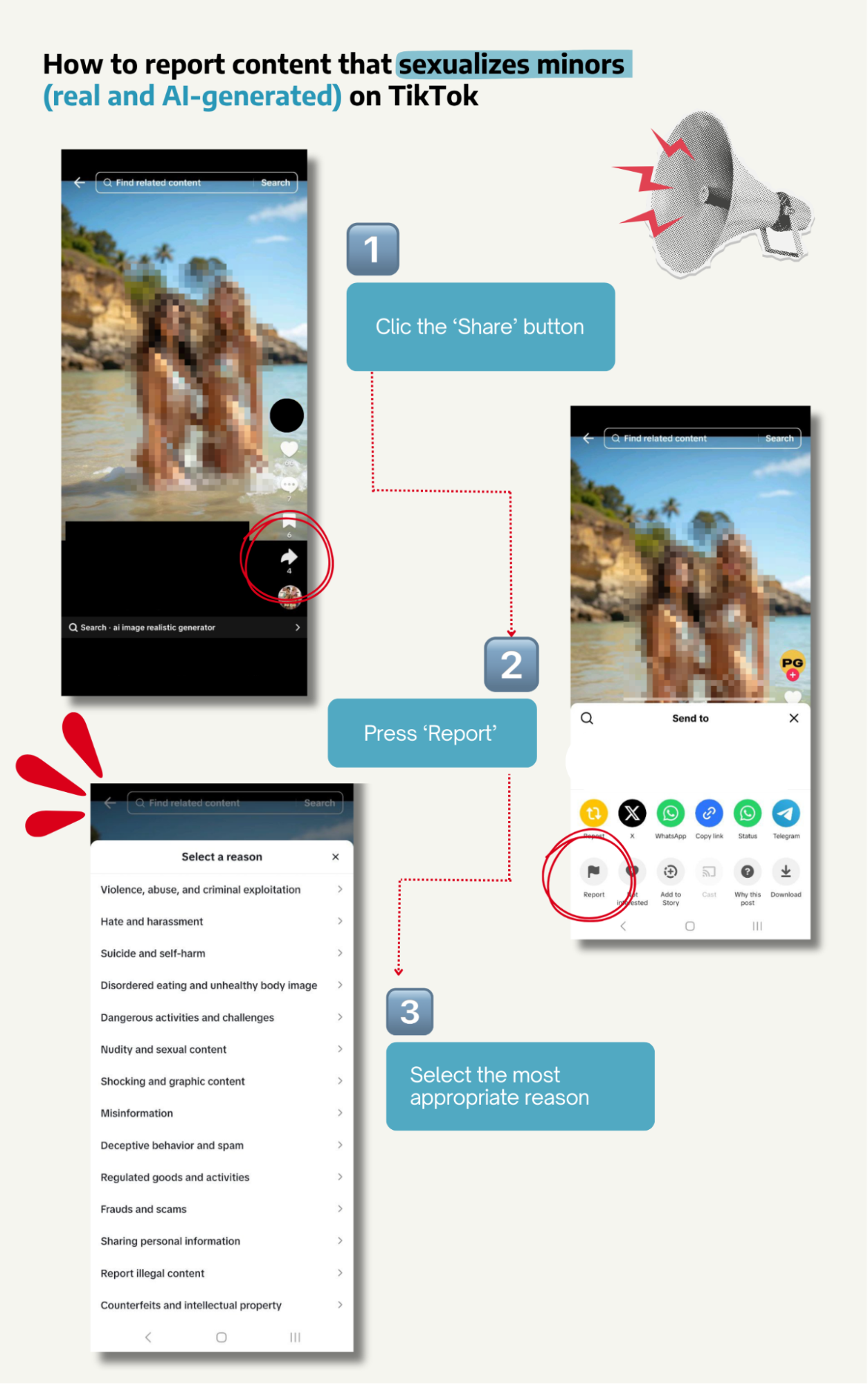

Online platforms such as TikTok are only legally obliged to remove a video if they have “effective knowledge” that it is illegal, according to the Digital Services Act (DSA), as Rahul Uttamchandani, a lawyer specializing in technology and privacy, explained to Maldita.es. As users, we can report this type of content so that the platforms are notified. To do so on TikTok, we must click on the “share” button, press “report,” and select the corresponding reason.

In Spain, sexual content created with AI involving minors can be considered child pornography and punished with prison sentences

Sexual content created with AI is not classified as a crime in Spain (although there are plans to make it one), but when it depicts minors, it can be prosecuted thanks to the broadening of the concept of child pornography. According to Marcos Judel, a lawyer specializing in data protection, AI, and digital law, “we have to be careful because not every image of a teenager in a bikini is child pornography, but an image hyper-focused on sexual parts of the body generated with sexual intent can be.”

Article 189 of the Spanish Penal Code punishes the production, sale, distribution, exhibition, and facilitation of child pornography with sentences of one to five years (and up to nine in more serious cases), even if the material originates abroad. “As for buyers, the purchase or possession of this type of material is also punishable by one to five years in prison,” Uttamchandani points out.

When asked about the number of cases in Spain in which AI has been used to create child pornography, the Attorney General's Office told Maldita.es that it does not have data “on this very specific type of crime affecting minors, as the statistics do not include the means used to commit crimes.” In September 2025, we compiled at least 14 cases of AI-generated sexual content involving minors in Spain that took place between 2023 and 2025.

The girls are not real, but the effects of these videos are: psychologists say they can normalize the sexualization of children and reinforce harmful consumption patterns

“Even though they do not represent real children, this content is deeply problematic,” warns Mamen Bueno, a health psychologist, psychotherapist, and Maldita contributor who has lent us her superpowers. The expert points out that videos such as those analyzed by Maldita.es can contribute to “normalizing child sexualization and can reinforce consumption patterns in people with harmful interests toward minors.”

“The problem lies in perpetuating an erotic and sexual image of young bodies,” explained Silvia Catalán, a psychologist specializing in sexuality and abuse. This has tangible consequences for minors: “The dissemination of sexualized content featuring ‘fictional minors’ makes it socially difficult to distinguish between what is permissible and what is ethically unacceptable, fueling a demand that can later be transferred to real minors,” said Bueno. We see this in the users who use the comments to promote Telegram accounts where they sell child pornography.

Several academic studies have analyzed the effects of sexualization: for example, a 2019 study by the Université Libre de Bruxelles concluded that sexualization leads to a process of dehumanization, in which people depicted in revealing clothing or suggestive poses are often considered less competent, moral, and warm. In the case of the sexualization of minors, this content can affect their mental health and lead to eating disorders, low self-esteem, and depression, according to a report by the American Psychological Association (APA).

TikTok's own algorithm increasingly immerses users in this type of content, recommending similar videos in its “For You” section and search engine, and even showing them profiles that appear to belong to real minors. “The platform itself is inadvertently promoting the exposure of minors to potentially harmful views. This increases the risk of recruitment, grooming, or harassment,” concludes Bueno.

The following malditas have contributed their superpowers to this article: Mamen Bueno, health psychologist and psychotherapist, and Silvia Catalán, psychologist specializing in sexuality.

Mamen Bueno and Silvia Catalán are part of Superpoderosas, a project by Maldita.es that seeks to increase the presence of female scientists and experts in public discourse through collaboration in the fight against misinformation.