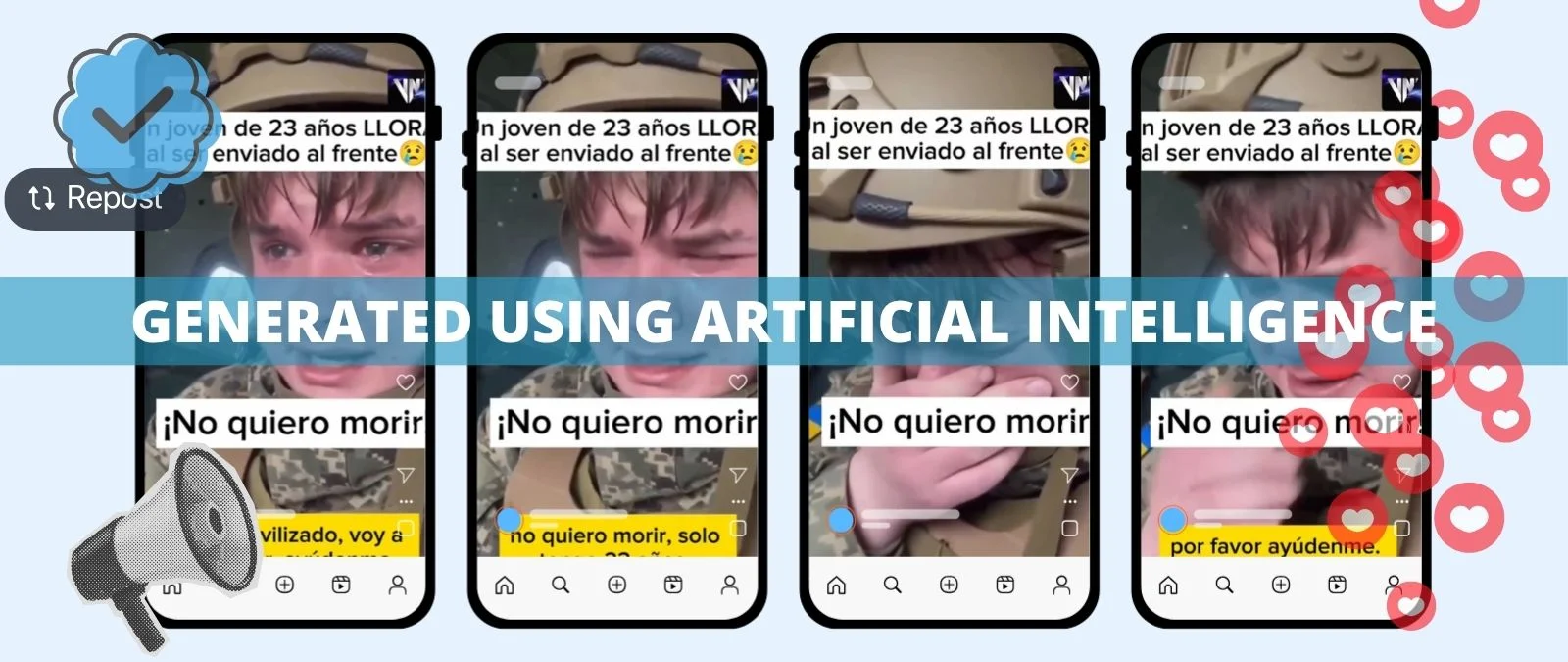

A supposed Ukrainian soldier who claims to be 23 years old begs, in tears, for help to avoid going to the front. “I don't want to die,” says the young man dressed in a military uniform with a Ukrainian flag on his arm. This video has gone viral on social media alongside messages claiming that Zelensky's government is “forcing” citizens to fight for the country.

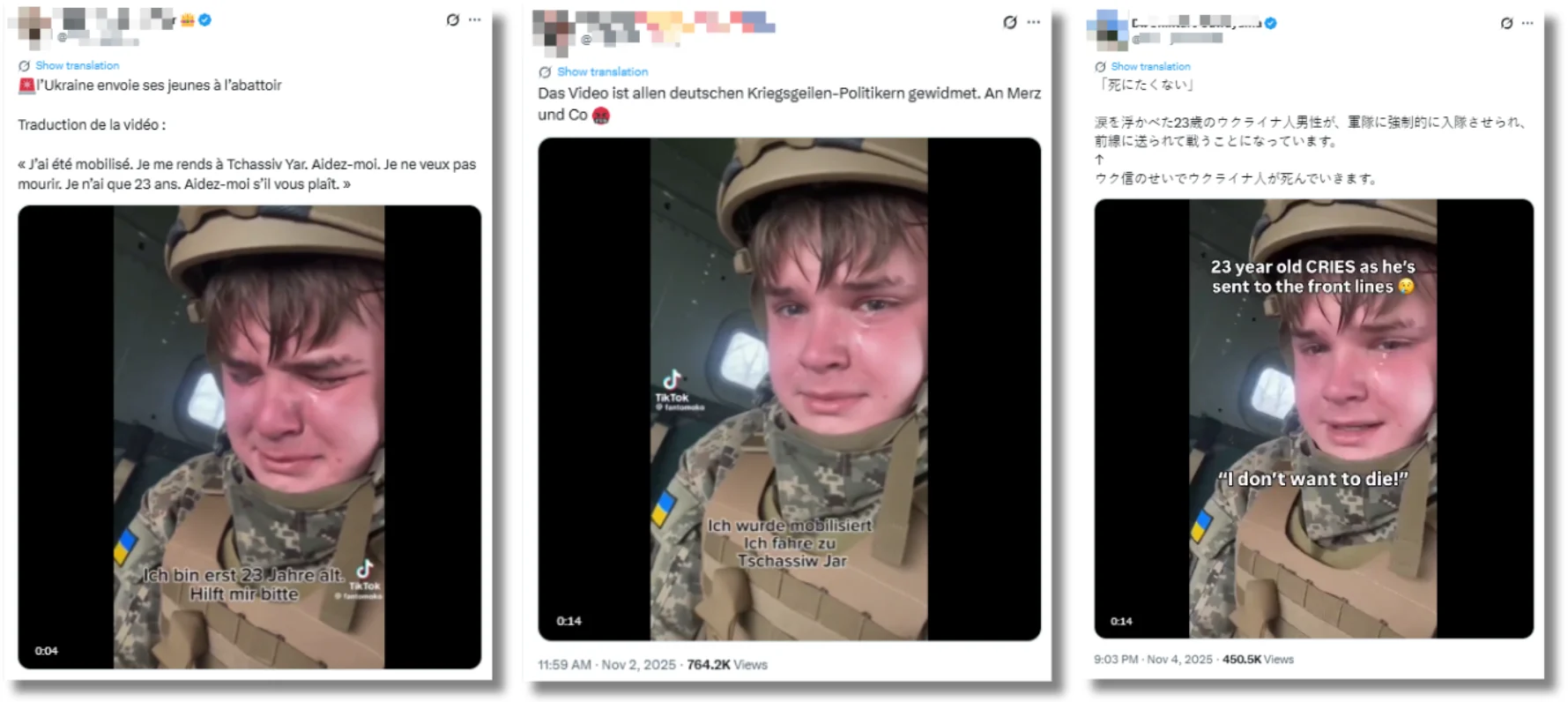

On Twitter (now X) alone, it has been shared in at least 12 languages besides Spanish and has received millions of views in just a few days. Most of the posts containing this video that Maldita.es analyzed do not have Community Notes, a system that allows contributors to add context to tweets they consider misleading.

Millions of views and content in at least 13 languages on Twitter

It is a video made with AI. The Italian fact-checking organization Open has analyzed it: the helmet does not match the one used by Ukrainian army soldiers, the name on the uniform is not written in Ukrainian (it is in Cyrillic), and the shape of the teeth is different. In addition, the TikTok profile where it was uploaded (now deleted) had more videos of this type, including one in which a soldier cries blood.

It is spreading on social media as if it were real. On X alone, it has been shared in at least 13 different languages, including German, Czech, Spanish, French, Dutch, Hungarian, English, Italian, Japanese, Portuguese, and Polish. The 25 posts spreading this disinformation (both the video itself and posts referring to it) have been viewed more than five million times and have remained available on the platform since November 2, 2025, when the first ones were published.

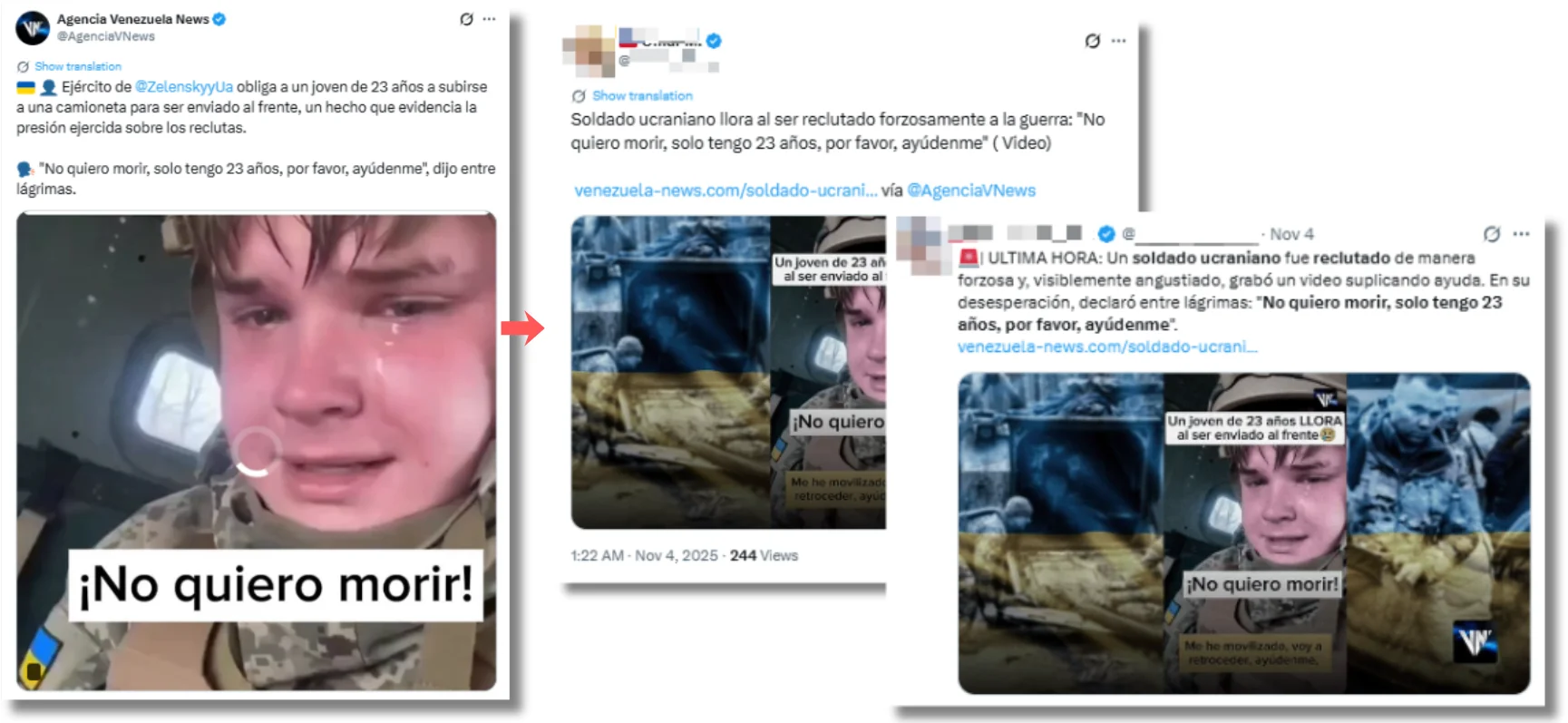

One of the most viewed posts is from Agencia Venezuela News, a Venezuelan website that regularly shares Russian disinformation; the post accumulated more than 710,000 views in two days. It shares the video with the following message: “Zelensky's army forces a 23-year-old man to get into a truck to be sent to the front, a fact that shows the pressure exerted on recruits”. In fact, in August 2025, the Ukrainian government changed its regulations to allow men between the ages of 18 and 22 who were eligible for combat to leave the country (something that had been prohibited until then under martial law, which came into effect after the Russian invasion began more than three years ago). Furthermore, mandatory military service in Ukraine applies to people between the ages of 25 and 60, so a 23-year-old would be exempt from forced conscription.

The video published by Venezuela News carries the outlet's logo in the upper right corner and has been used as a source for later posts in Spanish, especially those aimed at Latin American audiences.

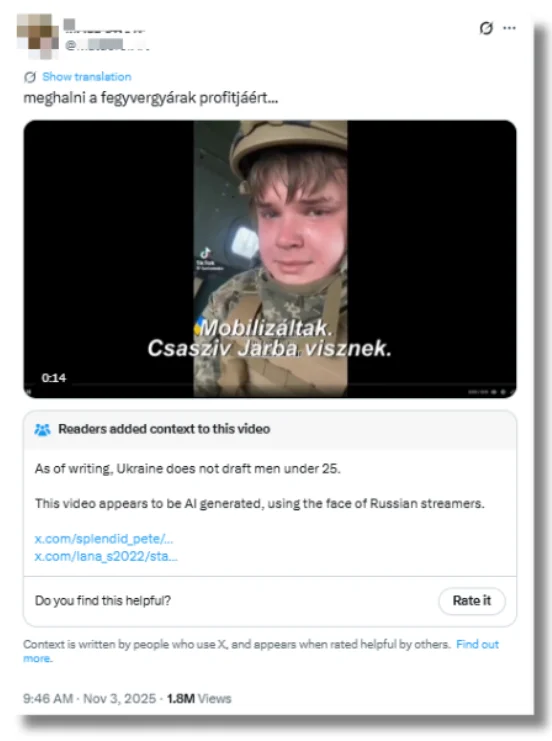

Of the videos analyzed by Maldita.es, the most viewed, with almost two million views, comes from an account that publishes in Hungarian and has translated the video's content into that language. This is one of the analyzed examples that does have visible Community Notes. Of the five posts with the greatest impact, four carry context messages from this system on Musk's platform, a system that has proven insufficiently effective in other disinformation crises. Overall, around 75% of the posts (19 of the 25 tweets analyzed) did not have visible Community Notes as of November 5, 2025.

Among those who shared this AI-generated video, profiles with blue ticks predominate. The accounts that shared this misinformation and had the greatest impact carry this label. Of the ten most viral posts, seven come from accounts with blue ticks, which no longer mean that a profile is verified or that its owner is who they claim to be (anyone can now obtain one by paying).

Grok continues to claim in several languages that the video is real, hours after it was reported to be made with AI

Although the video has been shown to be generated with artificial intelligence, Grok (X's AI chatbot) continues to tell users who consult it that the video is real. Maldita.es has identified responses along these lines in different languages. “Yes, the video appears to be authentic according to patterns of forced recruitment in Ukraine, with similar cases reported in 2025,” Grok said in Spanish to a user who asked if it could translate the content of the video posted by Venezuela News.

In another response, this time in Italian, it says that this video, which “shows the genuine desperation of a young Ukrainian who has been forcibly recruited,” “is not generated by artificial intelligence”. In another tweet in the same language, it said: “There is no evidence of manipulation or falsification from reliable sources”.

In another post, this time in English, it tells a user who asked whether the video was real or generated by artificial intelligence: “No, the video shows a real Ukrainian soldier in uniform, visibly emotional with natural tears and expressions consistent with genuine distress”. According to Grok, several factors lead it to conclude that the video is not a deepfake: “This clip's amateur style, TikTok watermark, and unpolished speech”.

In total, Maldita.es has identified more than 15 responses to users in which Grok treats the video as real content or explicitly claims that it is. Grok (or any other AI chatbot) is not a reliable source for verifying images or checking whether they have been generated with artificial intelligence. Chatbots can have a sycophancy bias that makes them agree with us on everything, or they can fall into a false-balance bias and avoid taking a position in their responses, even when there is sufficient evidence that an image is real (or not).