John J. Mearsheimer, political scientist and professor at the University of Chicago, says that he barely speaks Spanish. However, a network of YouTube channels has published more than 200 videos (most of which are still available) of the expert giving political analysis, in Spanish, on various current affairs topics: the Epstein case, Israel and Palestine, and the capture of Maduro by the United States, among others. The network's operators are taking advantage of the growing interest in political analysis to fill the platform with these videos, which are created with artificial intelligence (AI) and have drawn millions of views in just a few days. One of the tools they use is HeyGen, an AI video generator that allows users to clone anyone's image.

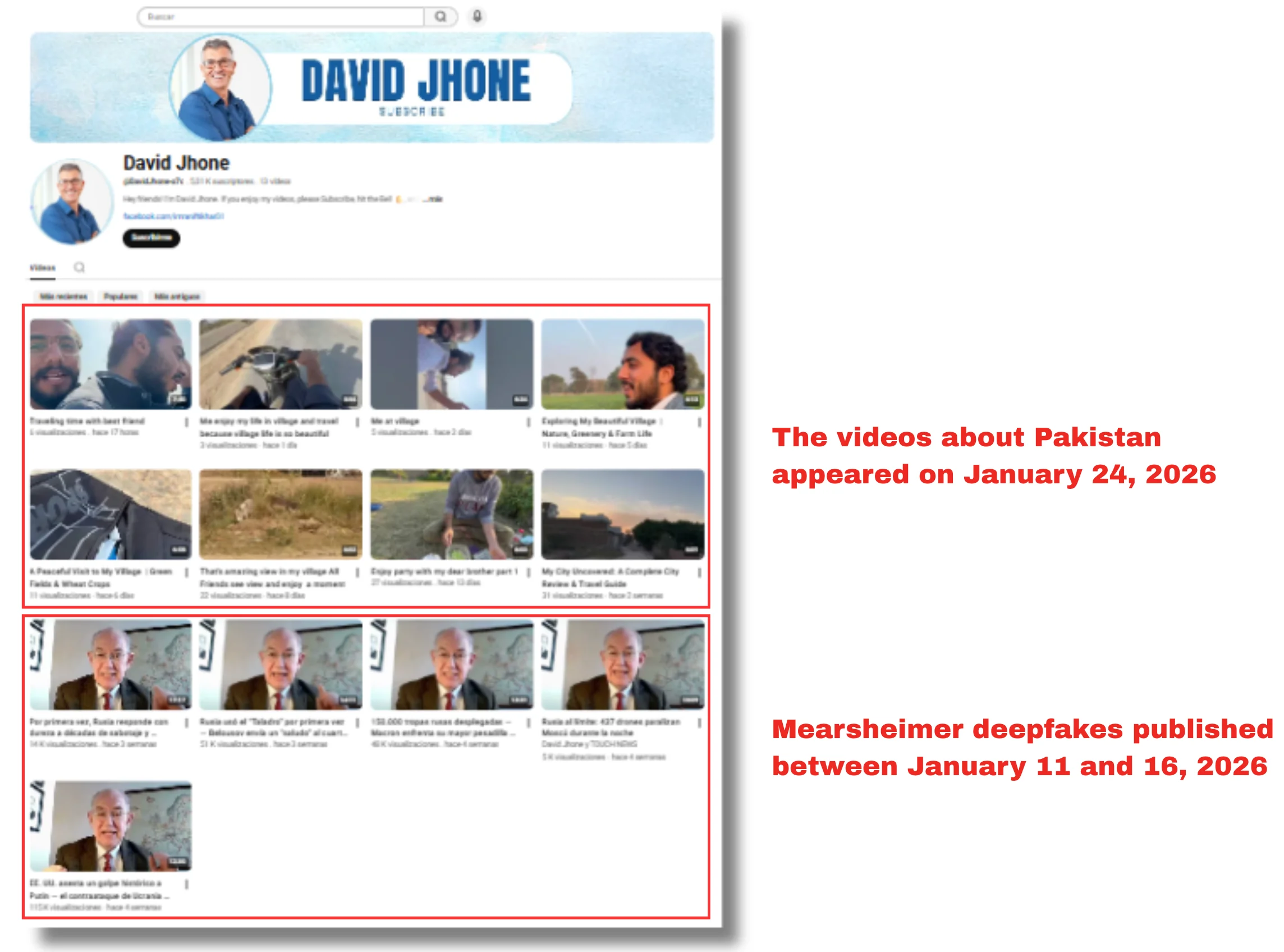

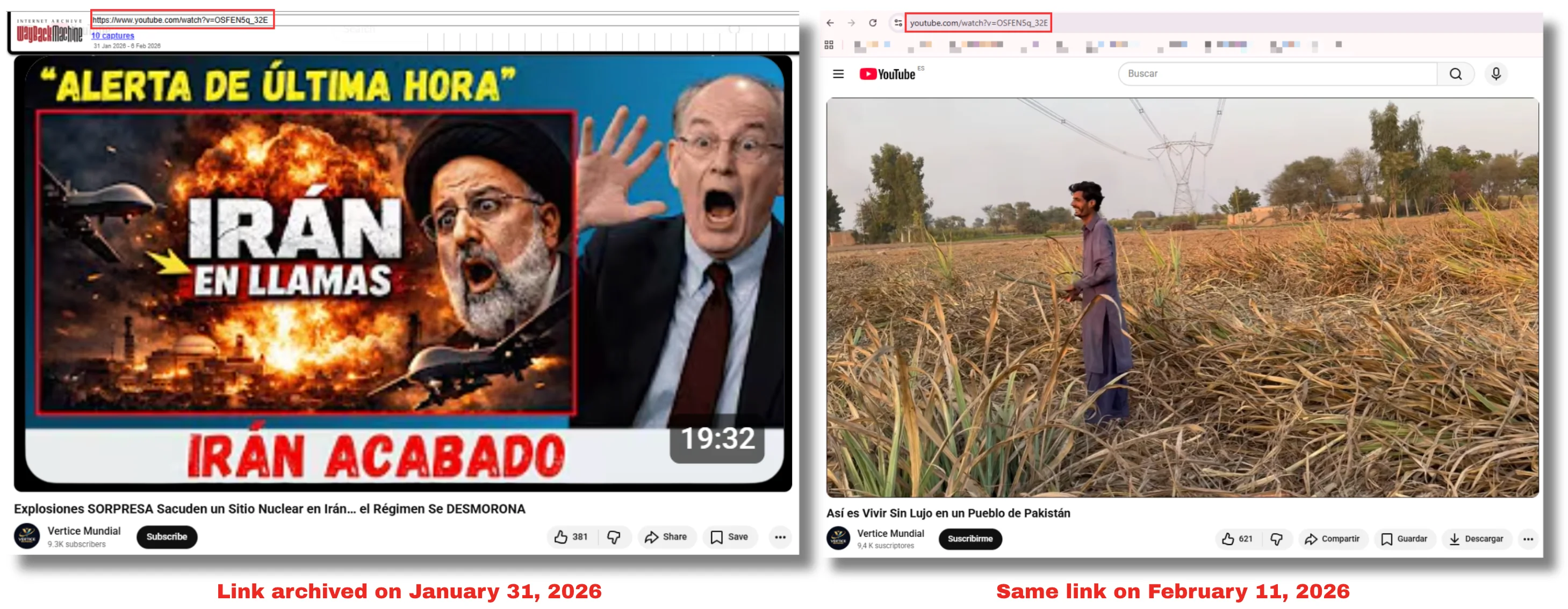

During the analysis, some of the 15 channels changed the type of content they publish. The expert's deepfakes (videos manipulated with AI to change or replace a person's face, body, or voice) have given way, without any clear explanation, to videos about Pakistan, the country where the network appears to be operating. “Life in a Village in Pakistan Left Me Speechless” and “Discovering a Hidden Village in Pakistan 🇵🇰” are two examples of short clips that have recently appeared on these channels. Some of these videos have been published on old URLs, taking advantage of their views and interactions.

Maldita.es found 15 channels created in three months that use John Mearsheimer's image in over 200 videos

“I have a significant problem with people posting fake AI videos of me on the internet,” Mearsheimer wrote on February 5, 2026, in a Substack post. Some are so realistic, he says, that even people close to him “do not realize they are watching a fake.” He tells Maldita.es that he was aware of deepfakes of him circulating in English and Chinese (he and his team detected a total of 43 channels), but he did not know that this content also existed in Spanish. “In some of these videos, I say things that I never said and would never say,” adds Mearsheimer.

Maldita.es has found more than 200 videos with the political analyst's image (some of them with thousands of views) published by 15 different YouTube channels created between October 2025 and January 2026. These profiles (which have interacted with each other on several occasions by sharing authorship of some videos) have a combined total of more than 170,000 subscribers as of the publication date of this article.

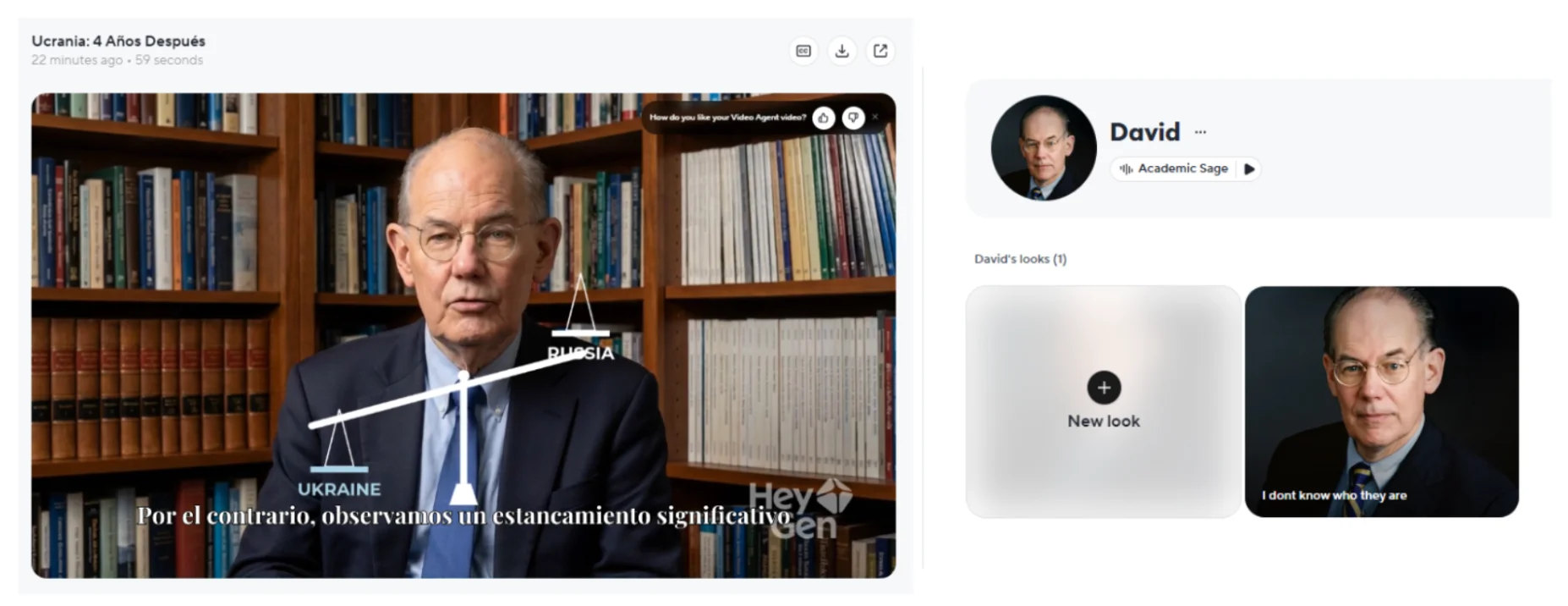

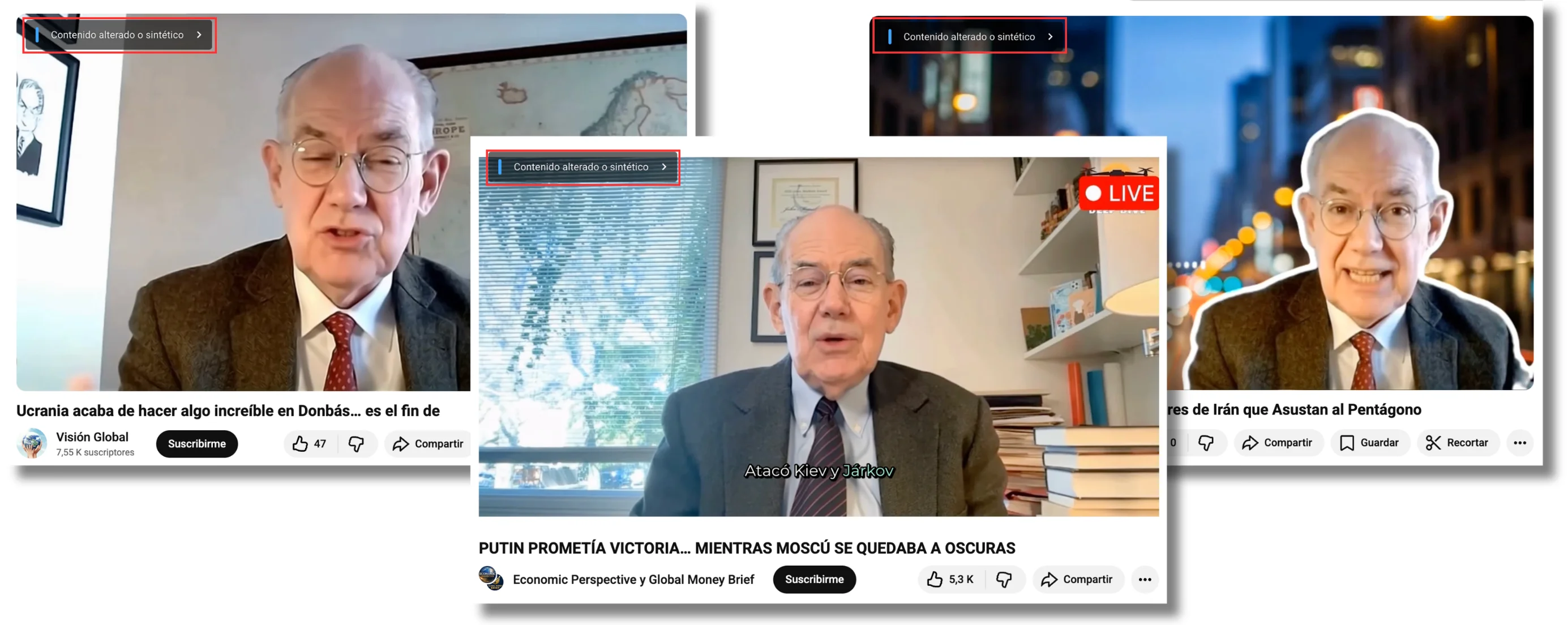

The content shows signs of having been created with artificial intelligence. In most videos, Mearsheimer appears wearing the same clothes. The footage is shot from the same angle and has the same background, identical to one used by the analyst in some of his own videos. In addition, the voice in the videos is robotic (and not always the same) and the protagonist's movements are unnatural.

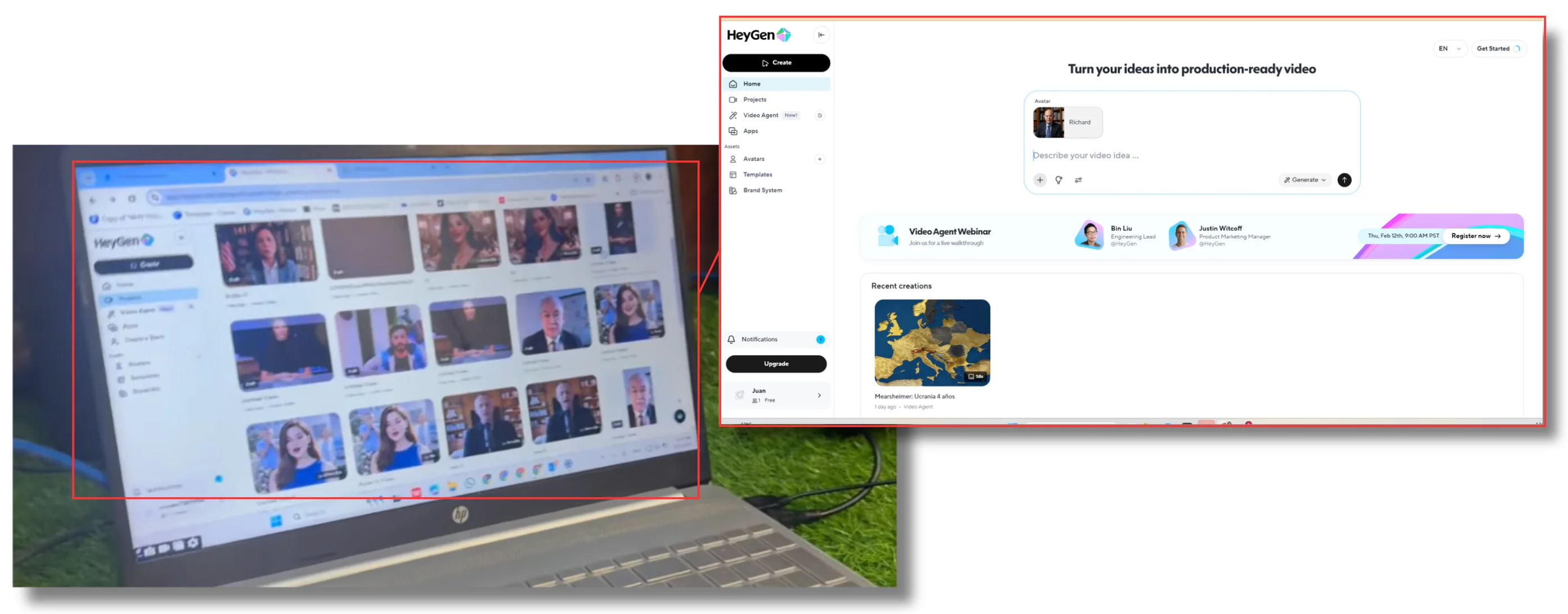

One of the videos posted by this network shows a computer screen clearly displaying HeyGen, an AI-powered video generator, as one of the tools used to create this content. These images show that the network's operators have used this platform to generate deepfakes of Mearsheimer, as well as of other people.

At Maldita.es, we tested it out: we asked this same tool to create a video of John Mearsheimer in Spanish analyzing the situation in Ukraine four years after the Russian invasion. In a few minutes, HeyGen generated a video similar to those published by this network of YouTube channels. In fact, Mearsheimer himself appears in its avatar gallery, under another name, as one of the options for creating content. The result can be further refined with a paid subscription to the platform. The tool also offers the option to “clone” a real person, although in Mearsheimer's case it was enough to type in his name because he is already in the platform's archive.

Fewer than half of the channels analyzed (six) label their posts as “altered or synthetic content,” and even those do not label all of them. This YouTube label, which appears in the detailed description of the post and briefly when the video starts playing, is used to “keep viewers informed about the content they are watching,” according to the platform’s website. “Making it appear that someone gave advice that they did not actually give” or “cloning another person's voice to create voiceovers or dubbing” are two examples of content that should carry this label.

Maldita.es reported one of these channels to YouTube, which determined that it violated the Community Guidelines and removed it shortly after. “YouTube does not allow content that is intended to impersonate a person or channel,” the platform explains. To detect this type of content, the platform says, it uses “a combination of advanced technology and human review.”

“International analysis, especially when linked to conflicts, crises, or threats, generates immediate attention and a high volume of interaction”, explains Rafael González, a member of the Observatory on the Social and Ethical Impact of AI (OdiseIA) and maldito who has lent us his superpowers. The main objective of Mearsheimer's deepfakes, says the expert, is to act as a “lever for rapid growth within the platform's recommendation system”, taking advantage of the latest geopolitical movements in the world.

From international politics to videos about Pakistan: how some of these channels have radically changed the content of their posts

When we began this analysis, most of the posts on these channels (whose names include words such as “world,” “global,” “politics,” or “economics”) were fake political analyses by Mearsheimer. They used alarmist language, making viewers believe they were revealing something exclusive. But in several examples, the video description does not match its content.

For example, a video titled “BREAKING NEWS! JD Vance EXPOSES Donald Trump and ADMITS CHAOS at ICE” is actually about the Epstein case and does not mention either the U.S. Immigration and Customs Enforcement (ICE) or JD Vance, vice president in Donald Trump's administration.

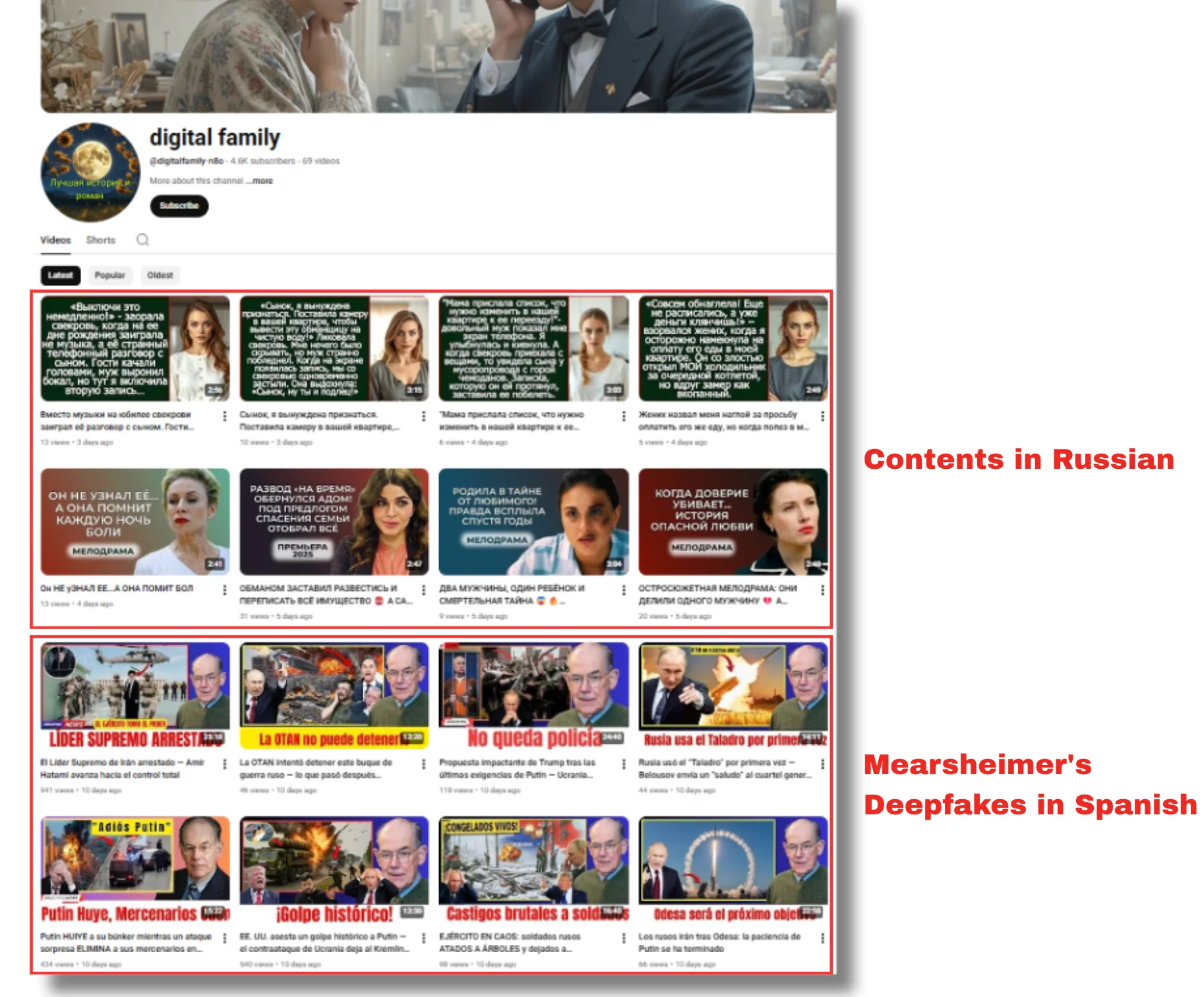

This type of content (which until now has been predominant) has given way to other videos with completely different themes, no apparent connection, and different formats. In the words of Rafael González, once the channel has gained visibility, this type of content is no longer necessary and becomes a risk. “Removing it and replacing it with unrelated videos allows the channel to be preserved as a digital asset while reducing the possibility of complaints or sanctions,” he says. At Maldita.es, we have verified that dozens of Mearsheimer deepfakes were removed or replaced during this analysis. According to the expert, these videos were used to “activate the channel” and were never “the end of the operation.”

Recently, four of the channels analyzed began posting videos about Pakistan. “Rural life in Pakistan is harder than I thought” and “This is how people live far from cities in Pakistan” are two examples of the content they now publish. These videos are shorter (averaging six minutes, compared to Mearsheimer's videos, which are over 15 minutes long) and appear to be recorded spontaneously and with limited resources.

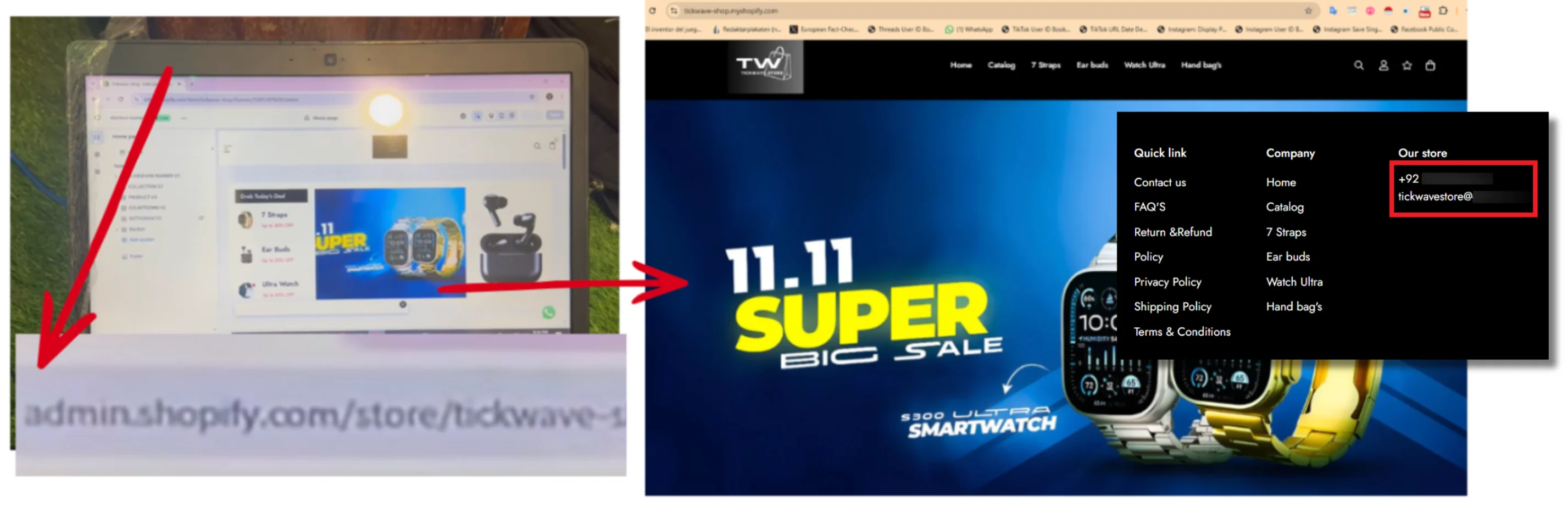

These videos, as well as other data, suggest that the network is operating from Pakistan. On one of the computers, the operators can be seen editing a Shopify website called tickwave, whose phone number has the Pakistani country code (+92).

They have also changed the content of old posts. In several examples, the original link has been kept (along with other parameters such as views and publication date), but the content of the video has been completely changed. One example is the video now titled “This is What Life Without Luxury is Like in a Pakistani Village”, which was previously called “SURPRISE Explosions Rock Nuclear Site in Iran... Regime COLLAPSES”. It was published on January 31, 2026, and went from showing a deepfake of Mearsheimer in Spanish to a video of several men in a field.

Another profile (recently removed from the platform) stopped posting Spanish-language deepfakes of the political analyst and started a new series of videos in Russian. It posted at least eight of these videos, each less than four minutes long. According to their titles, they told stories such as a birthday party where, instead of music, a telephone conversation between a mother and her son is played, or a woman who secretly places a surveillance camera in her son's apartment to uncover deception.

The experts consulted agree that the ultimate goal of these channels could be to monetize them. Tomás Arriaga, an expert in digital marketing specializing in monetization and social media performance, explains that, based on the videos on this network (which he identifies as a “strange mix”), the operators' goal may be to “learn how to make money on the internet,” and not only through YouTube. As seen in some of the videos about Pakistan, they also use tools such as Shopify (an e-commerce platform), Arriaga points out.

As of February 11, 2026, the network of channels analyzed here has not been monetized. However, as Rafael González explains, “the absence of visible monetization does not imply an absence of economic or strategic incentive”. The expert says that these channels appear to be in “a phase of value accumulation, in which what matters is not immediate income but the construction of a reusable asset”. In addition, he asserts that keeping the channel unmonetized “reduces visibility to the platform's control systems and allows it to operate longer without interference”.

Several political analysts have been denouncing impersonation in YouTube videos for months

Mearsheimer is not the only expert who has been the subject of deepfakes on YouTube. On this same network of channels, we have found dozens of videos in Spanish featuring American fund manager and investor Ray Dalio. On a smaller scale, videos have been posted of other personalities such as Jack Smith, former Special Counsel for the US Department of Justice; Piers Morgan, British journalist and long-time victim of deepfakes; and Norbey Marin, Venezuelan clinical psychologist and YouTuber, among others. All of them also show signs of having been generated with some kind of artificial intelligence tool. As in the examples featuring Mearsheimer, neither the subjects' movements nor their voices seem natural.

In January 2026, Yanis Varoufakis, economist and former Greek minister, reported in The Guardian that his image was being used to discuss current political issues, such as the situation in Venezuela. “They say things I might have said, sometimes mixed in with things I would never say”, he explained in the British newspaper. His first impulse, he says, was to try to get the platforms (including YouTube) to remove them, but he gave up: “No matter what I did, no matter how many hours I spent each day trying to get the big tech companies to remove my AI doubles, many more would spring up again”.

In December 2025, American historian Victor Davis Hanson said he was one of those affected. In a podcast on The Daily Signal, he explained that he had found posts that falsely used his image, voice, and surroundings (copying the studio where the video was recorded) to promote ideas that he had “never expressed before” and that he “does not share”. According to Hanson, these channels “want to use someone who may have a higher profile” to spread their ideas. That same month, several social media users also reported the existence of a YouTube channel featuring deepfakes of American economist Jeffrey Sachs.

“International analysts are particularly well-suited to this type of impersonation”, explains Rafael González. They are people with abundant public audiovisual material, “which facilitates voice and image cloning”, and they have a recognizable and structured discourse “that allows plausible messages to be generated using language models”, he says.

The increasing popularity of this type of content, according to the expert, “is due to a combination of the growing accessibility of AI tools and the difficulty platforms have in responding quickly to abuses that do not always clearly cross regulatory boundaries”.

* This article was updated on February 13, 2026 to add YouTube's statements.

This article was written with the help of the superpowers of Tomás Arriaga, an expert in digital marketing, and Rafael González, a member of the Observatory on the Social and Ethical Impact of AI (OdiseIA).

Thanks to your superpowers, knowledge, and experience, we can fight harder and better against lies. The Maldita.es community is essential to stopping misinformation. Help us in this battle: send us any hoaxes you come across to our WhatsApp service, lend us your superpowers, spread our debunkings, and become an Ambassador.