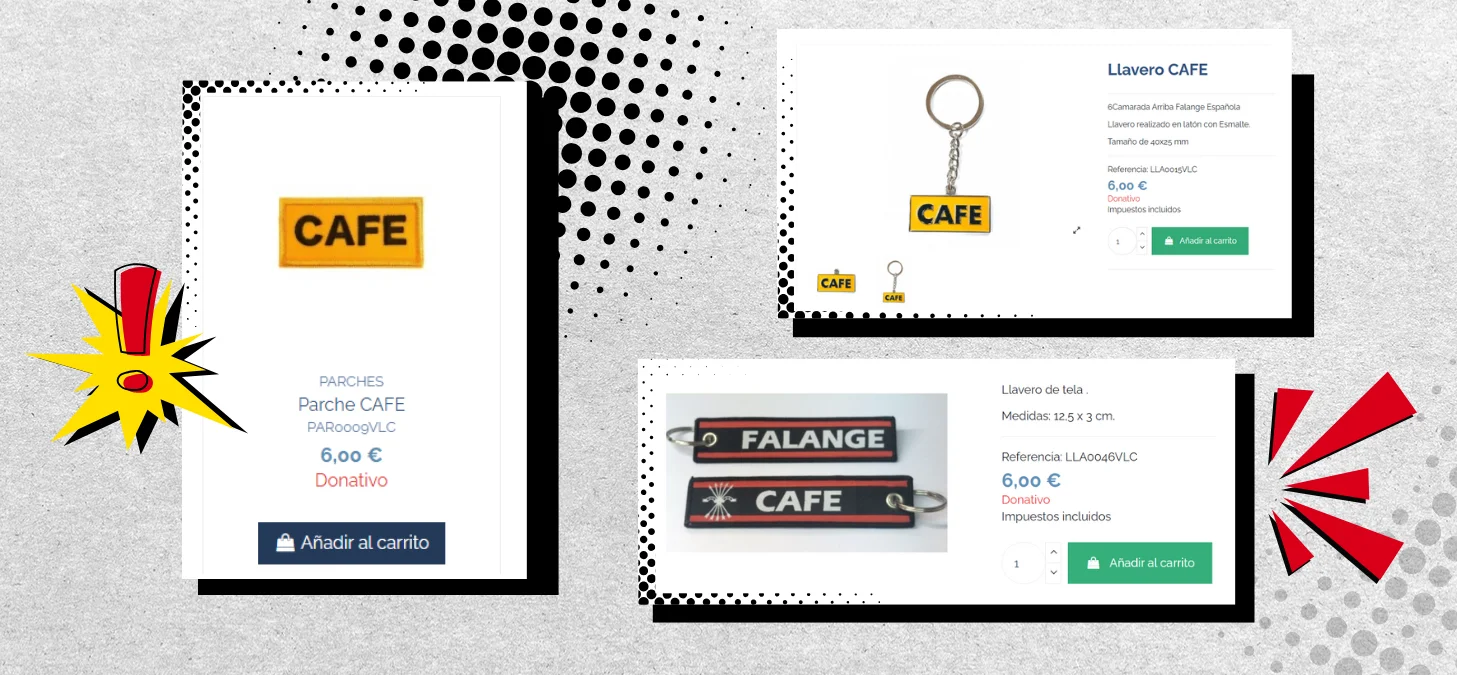

When most people read the word “CAFE,” they probably think of the bitter drink served in a cup. In the coded language used by those who seek to evade platform restrictions and glorify dictators or their regimes, however, it means something very different. The letters stand for “Camarada Arriba Falange Española” (“Comrade, Up with the Spanish Phalanx”) and serve as a covert way of showing support for Francoism. Similar terms are used to refer to Adolf Hitler, Benito Mussolini and Nazism. Such coded terms are just one of the strategies used to evade platform restrictions and spread disinformation and conspiracy theories. Another is the use of memes that disguise extremist ideas as humour in order to reach a younger audience.

These tactics pose a major challenge for platforms. Many have rules banning hate speech or the glorification of violent extremist organisations, but both academic research and experts interviewed by Maldita.es acknowledge that this type of content is often difficult for social networks to identify and moderate. Beyond content regulation, experts recommend measures such as showing links to external pages with reliable, verifiable information when users search for content that may be related to Holocaust denial or other conspiracy theories, and training artificial intelligence systems to detect inappropriate content more automatically, although automated processes carry their own risks.

‘Pop Fascism’: rewriting history in the digital age

This is the third article in an international investigation carried out by Maldita.es (Spain) and Facta (Italy). The project explores how fascist discourse, using the features of contemporary social media, infiltrates our daily lives in three stages: normalisation, acceptance and idolisation.

This research was made possible with the support of Journalismfund Europe.

Terms, hashtags and emojis: how users evade platform rules

UNESCO has been studying hidden language for years, such as the coded words used to deny the Holocaust, and urges platforms to act “to break the cycle of algorithms that actively amplify content inciting hatred,” the institution told Maldita.es. “The difficulty in keeping up with such keywords lies in the fact that they are based on a constantly evolving lexical and ideological repertoire,” UNESCO added.

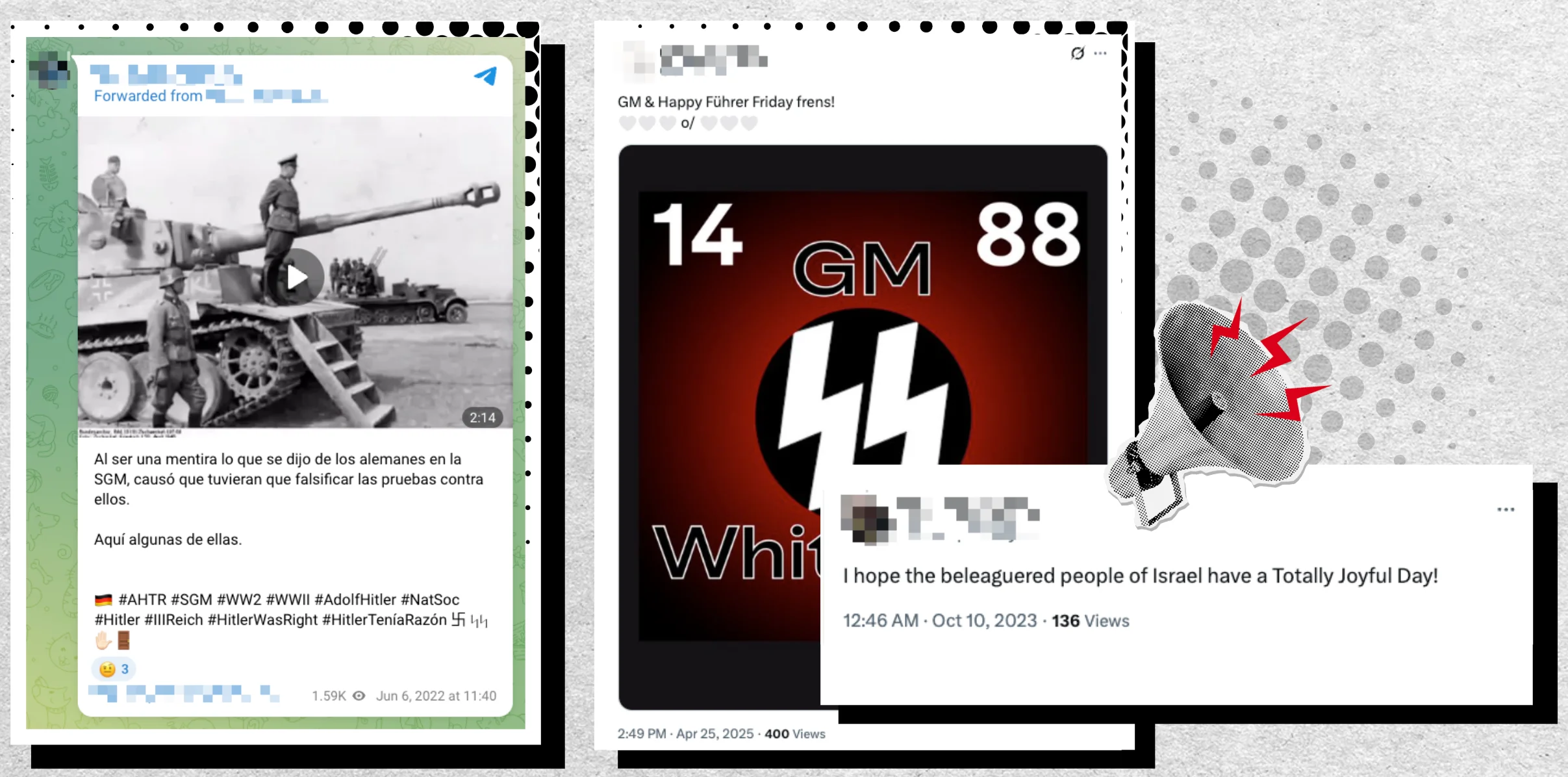

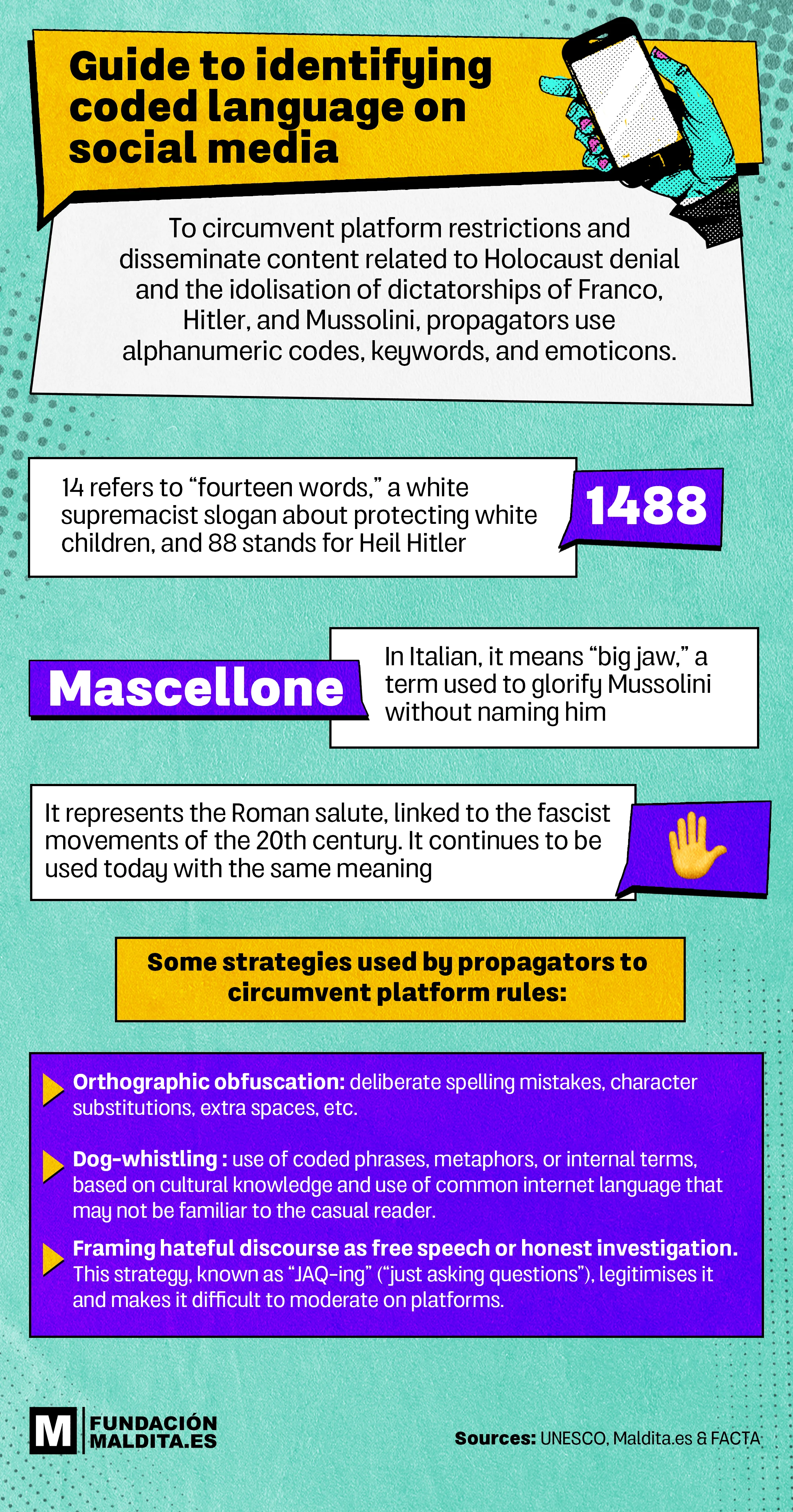

Antisemitic discourse (prejudice or hatred against Jewish people, which underpinned the Holocaust) spread through coded words relies on numbers such as 1488. The figure combines two white-supremacist symbols: 14, for the “Fourteen Words” slogan about protecting white children, and 88, shorthand for “Heil Hitler” (H being the eighth letter of the alphabet). Other phrases include “Have a totally joyful day,” an apparently benign wish in which the initials TJD stand for “Total Jewish Death.”

Despite overwhelming documentary evidence of the Holocaust, those who deny it use terms such as “Holocuento” (“Holo-story”), “Holoengaño” (“Holo-hoax”) or “Ana Fraude” (“Anne Fraud”), a reference to Anne Frank, the Jewish girl and Holocaust victim known for the diary she kept during the two years she spent in hiding in Amsterdam. UNESCO notes that Holocaust denial began even before the end of the Second World War, “when the perpetrators of the genocide sought to conceal or obscure their crimes,” and therefore stresses that “it is important to understand the rhetorical resources and strategies employed in Holocaust-denial discourse” in order to decode them.

There are also numerous ways of referring to Adolf Hitler without naming him directly. These include “Austrian painter” (Hitler aspired to be a painter but was rejected by the Vienna Academy of Fine Arts), “the mustachioed one,” “Uncle A” and his initials, AH. The hashtag #AHTR, meanwhile, stands for “Adolf Hitler was right.”

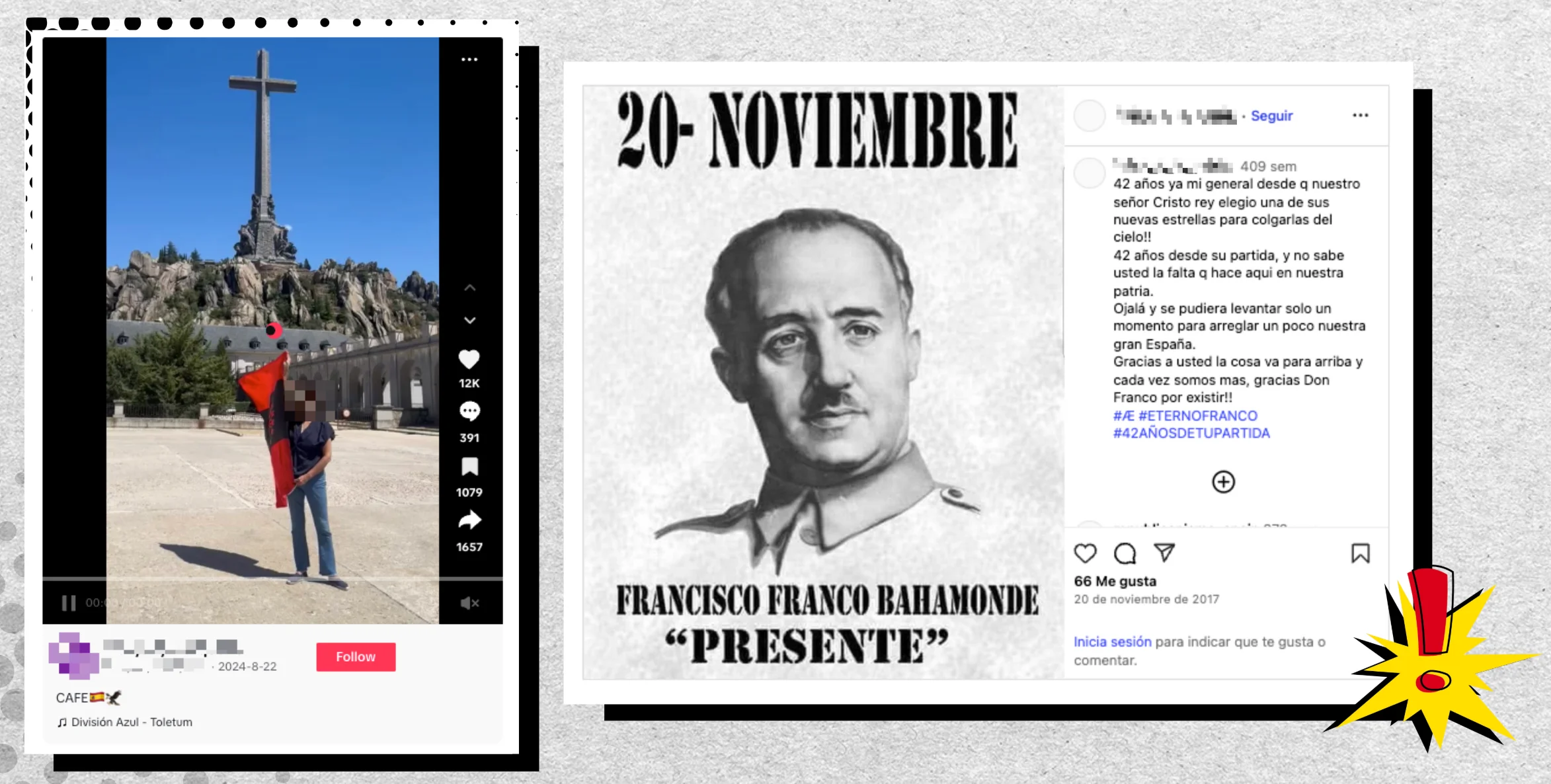

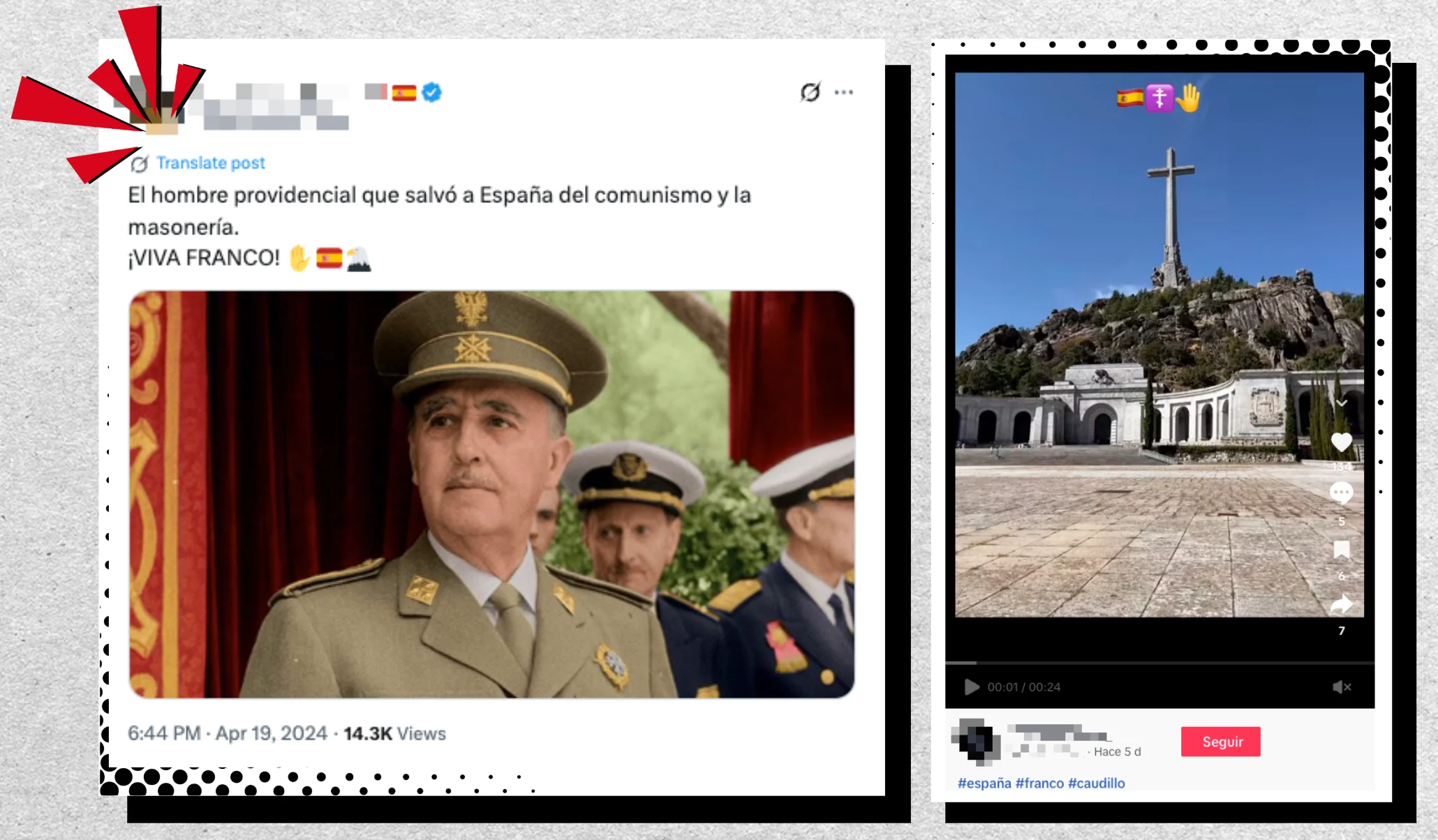

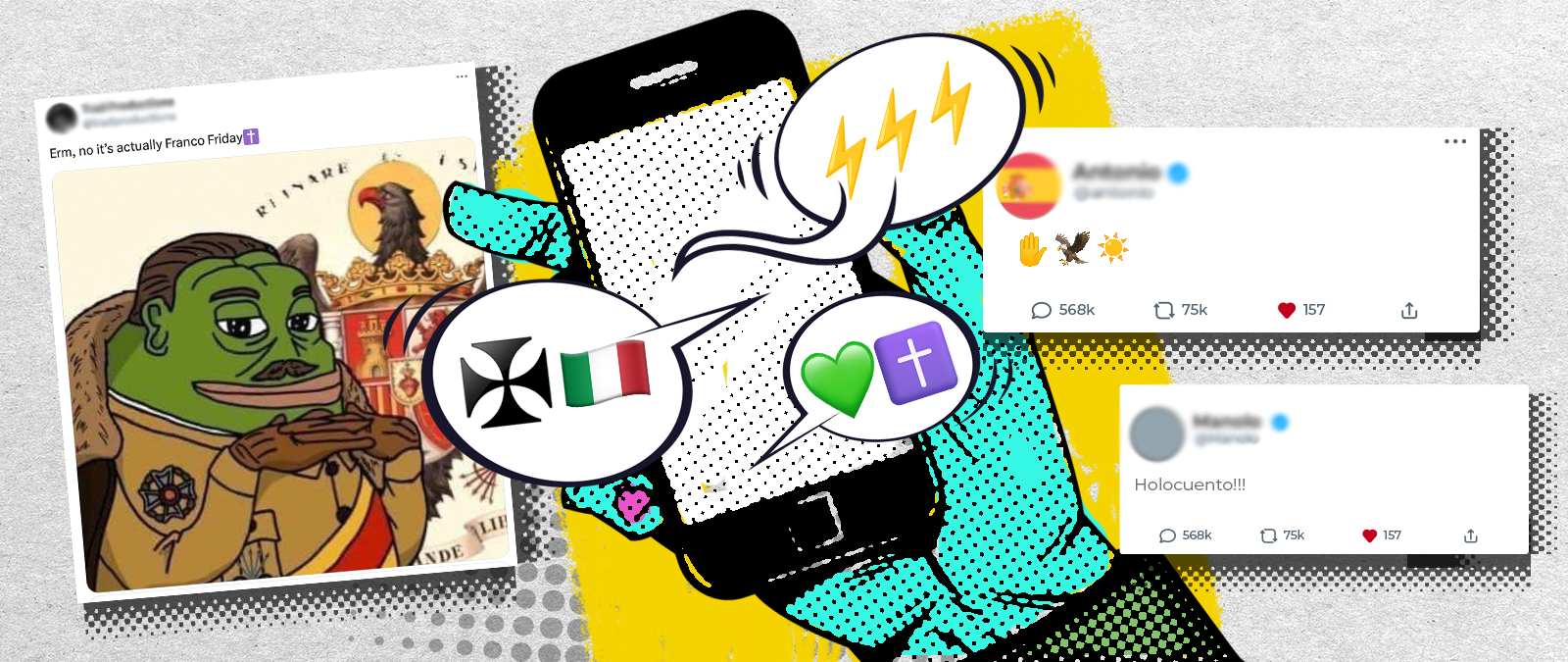

In Spain, common examples include CAFE (a Falangist slogan attributed to General Gonzalo Queipo de Llano, used to convey that everyone on the opposing side had to be shot); the abbreviation Æ, which stands for the Falangist cry “Arriba España” (“Up with Spain”); and FF, short for “Franco Fridays,” an expression of unclear origin used to commemorate Francisco Franco once a week.

Similarly, in Italy, Benito Mussolini is known by a range of nicknames that are replicated on social media to refer to Il Duce. For example, Mascellone (“big jaw”), Pelatone (“bald head”), Crapa pelada (“bald head” in Lombard dialect) or Gran Babbo (“big daddy”), among others.

The use of these terms is not confined to online spaces. Some people even profit from it. In Spain, it only takes a few clicks to find stores selling keychains, patches, stickers or pins emblazoned with the acronym CAFE for less than ten euros.

Beyond alphanumeric codes and cryptic words, emojis are also used. In antisemitic discourse, Jews are referred to as animals: 🐀 (rat), 🐍 (snake), 🐷 (pig) or 🐙 (octopus). Another animal used to allude to Nazism or Francoism without displaying imagery banned by platforms is the eagle (🦅), which featured on Spain’s flag during the dictatorship and, together with the swastika, on the Nazi Reichsadler (German Imperial Eagle). The raised hand emoji (✋) symbolises the Roman salute associated with fascist movements, while double or triple lightning bolts (⚡️⚡️⚡️) allude to the SS, the organisation founded by Hitler in 1925 to protect Nazi Party leaders, which played a central role in the Holocaust. Other examples include the runic ᛋᛋ symbol, representing the SS insignia, and the swastika (卐), the hooked cross adopted as the Nazi Party emblem in 1920.

Harmless to outsiders, loaded with meaning for those who can decode them

These tactics can be summed up by the concept of a “dog whistle.” Like the real-life whistle used to train dogs with ultrasonic sounds that are inaudible (or barely audible) to humans, this tactic aims to communicate with a relatively restricted group of followers using a code of meanings understood only within that inner group, but practically incomprehensible to outsiders, except in very superficial ways. The term thus refers to coded messages that operate on two levels, political and linguistic: harmless and vague to those who don't know their true meaning, extremely loaded with meaning for those capable of decoding them.

As UNESCO explained to Maldita.es, several other tactics are used to evade platform regulation. These include spelling obfuscation (deliberate misspellings, character substitutions, homographs, extra spaces or punctuation) and signposting, that is, relatively benign posts that link to marginal platforms where the lack of moderation allows more radical and explicit content. There is also multimodality, the combination of different formats or codes in the same piece of content (for example, embedding coded words in an apparently innocent video, which makes automatic detection harder), and reframing, which “consists in presenting hate speech as freedom of expression or honest inquiry,” according to the institution.

“This strategy, sometimes described by the acronym ‘JAQ-ing’ (Just Asking Questions), doesn’t violate freedom of expression per se, but rather exploits its protections to create ‘plausible deniability’ and blur intent, producing a grey zone where moderation systems are less effective,” UNESCO adds.

The use of such tactics allows like-minded individuals to recognise each other. Hidden language, often difficult for outsiders to identify, functions as a kind of password: it is an easy way for those who understand it to find each other and express their shared beliefs. However, these codes can also reach broader audiences, who may end up sharing them unwittingly, with the same potentially harmful effects. These elements therefore adapt and migrate quickly between platforms, slipping extremist messages into everyday conversations because at first glance they “seem harmless.”

Memes: a tool to disguise extremist messages as ‘humour’

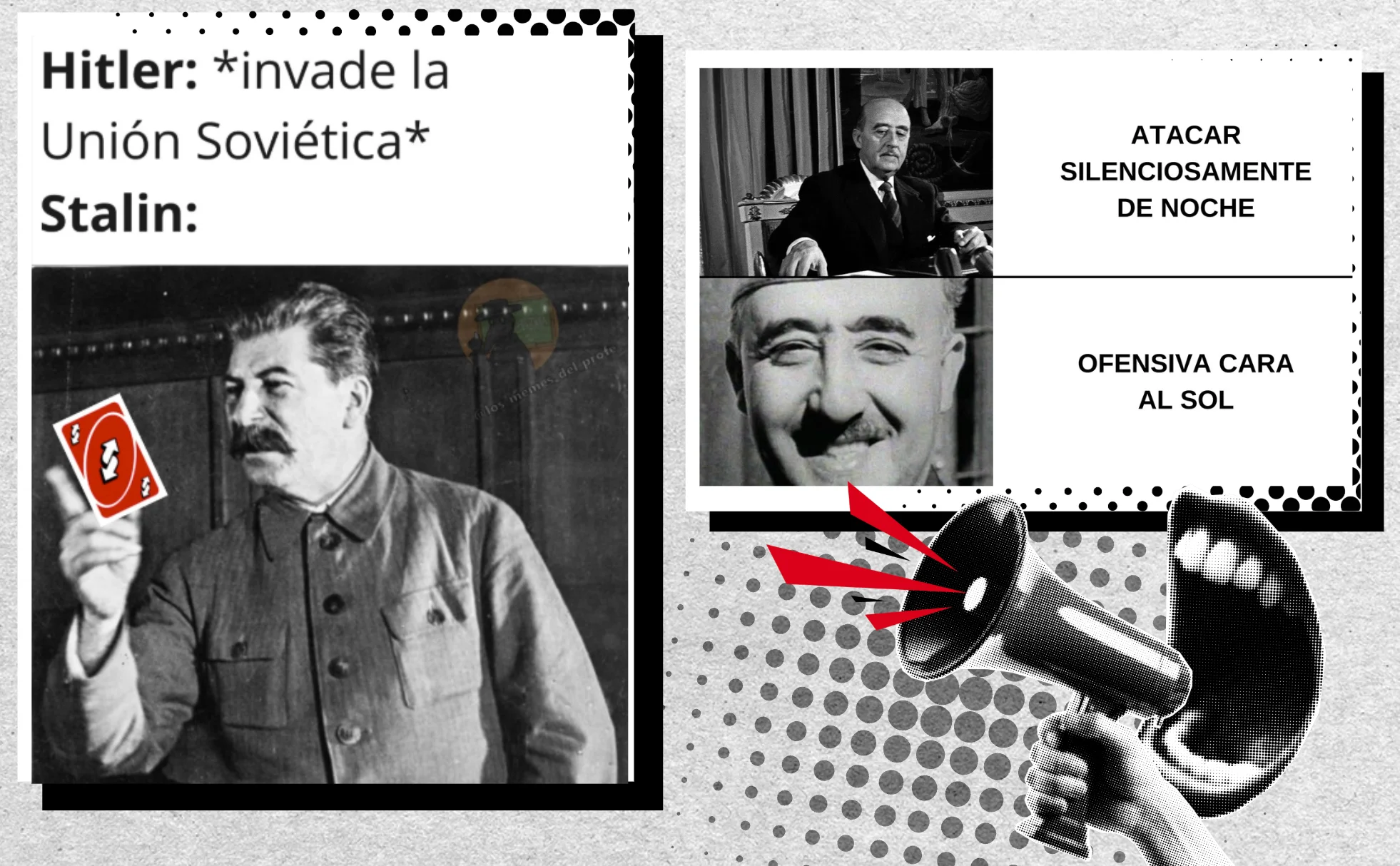

Online propaganda has learned to use memes as building blocks of a broader discourse, exploiting pop culture “to communicate messages of hate without the risk of exposure to criticism,” explains the Dutch Ministry of Justice and Security’s study Far right memes: undermining and far for recognizable. In this way, such content reaches young audiences, for whom “the simplified message of memes can spark curiosity” about more extreme ideas or materials.

Before spreading widely, these memes often circulate in private groups or forums, framed in a supposedly humorous and subtly coded way. This gives users distance and deniability, since they can always claim they were “just joking,” the study notes.

For Richard Kuchta, senior analyst at the Institute for Strategic Dialogue (ISD), it is important to distinguish whether humour is actually involved and, if so, what purpose it serves: “Is the humour really to spread the ideology or is the target to make fun of the ideology and ridicule it? Which are like two completely different things.”

The meme-and-“humour” strategy has been explicitly promoted by figures such as Andrew Anglin, a U.S. neo-Nazi who in 2013 founded the website The Daily Stormer (its name a tribute to the Nazi tabloid Der Stürmer). As cited in the report Understanding the Alt-Right. Ideologues, ‘Lulz’ and Hiding in Plain Sight, Anglin wrote openly: “When using racial slurs, it should come across as half-joking, like a racist joke that everyone laughs at because it’s true. This follows the generally light tone of the site. It should not come across as genuine raging vitriol. That is a turnoff to the overwhelming majority of people.”

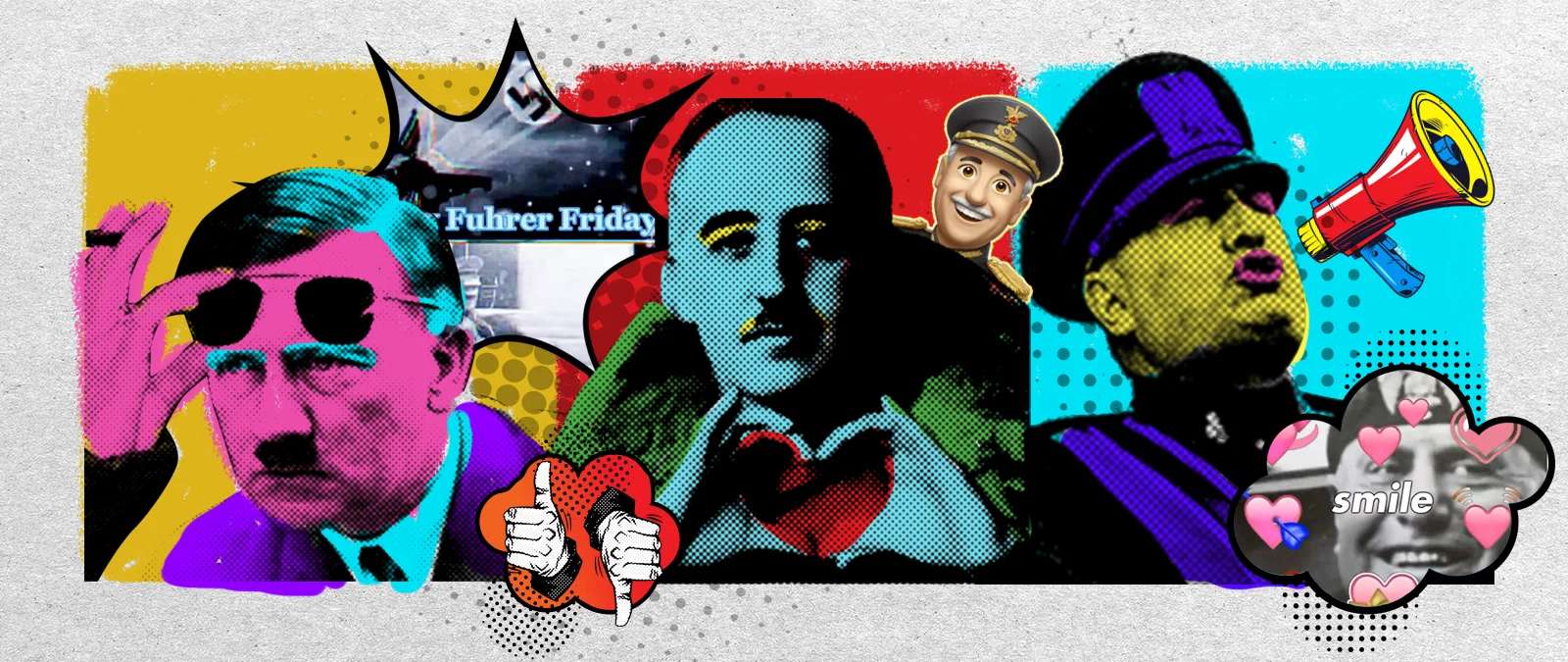

The most iconic expression of this philosophy is Pepe the Frog, an anthropomorphic cartoon frog that for years served as a mascot of the U.S. far right. Pepe started out as the protagonist of a comic strip and first became a harmless meme, but around 2015 images of the frog in an SS uniform began circulating, associated with Nazi symbols and accompanied by antisemitic and Holocaust-denial slogans. Over time, the meme became inextricably linked to Donald Trump, who in 2015 retweeted an illustration of himself depicted as Pepe.

It’s difficult to measure the direct effects of a single meme, says the analysis Memes as an online weapon; however, they can contribute to “a series of indirect or gradual effects,” such as the normalization of certain positions, the formation of groups and identities, and the inspiration for extremist actions, it adds.

Since 2007, the website knowyourmeme.com has catalogued and explained the most viral memes, making them accessible to the public. A keyword search there can help users understand the meanings behind these images and avoid falling victim to disinformation. For example, it documents how the Pepe the Frog meme was used by singer Katy Perry in 2014 to joke about jet-lag exhaustion; by Donald Trump in 2015, before he became U.S. president, in an image showing Pepe as Trump himself standing at a podium with the presidential seal; and by the Russian Embassy in the UK in 2017, which posted the meme in response to a meeting between the then British Prime Minister, Theresa May, and Trump, who had just been elected President of the United States.

Access to reliable information sources: a platform-based solution to counter the spread of this content

In general, social platforms’ community guidelines do not explicitly prohibit this kind of coded or ambiguous content, but they do claim to restrict posts that promote hate speech (see, for example, Instagram or TikTok). In practice, this means they do not remove content about Hitler when it is historical or educational, but they state that they will delete posts that glorify antisemitism or Nazi ideology.

The study Digital Dog Whistles: The New Online Language of Extremism states that processes for identifying content on social media “are not sufficiently effective to preemptively prevent the spread of hate content,” so platforms “continue to rely on manual reporting to remove content already uploaded.” Moderation efforts are even harder in the case of memes because “they are presented in an ambiguous or coded manner,” according to the analysis Memes as an online weapon. “Even in cases where ambiguity and humor are far from obvious, protecting users remains difficult because intentions and effects are hard to prove,” the report notes.

A 2020 analysis by the Institute for Strategic Dialogue (ISD) found that the algorithm of Facebook, owned by Meta, actively promoted Holocaust-denial content. When users typed “Holocaust” into Facebook’s search bar, denialist pages appeared, and the ISD identified at least 36 Facebook groups, with a combined following of more than 366,000 users, that denied the Jewish genocide. Another ISD report found that TikTok hosted hundreds of accounts that openly supported Nazism and used the app to promote its ideology and propaganda. The study added that TikTok was not “removing such accounts effectively or promptly,” and that by the time deletions occurred, “weeks or months had passed and the content had already reached a large audience.”

At Maldita.es we have also reported how Grok, X’s AI system, has shared antisemitic content and generated racist, xenophobic and Nazi-related images featuring famous athletes. This increases the likelihood of misleading content being created, or of content being taken out of context and shared on X and other digital platforms, which could generate disinformation about real people.

We also found that manipulated videos, such as those showing Aitana and Quevedo singing Cara al Sol (the Falangist anthem), which could also lead to disinformation, are still active on TikTok two years after being published, without any warning that they are false. Likewise, a video showing bees being gassed at a streetlamp, tagged #extermination and set to the Erika March (a Nazi-associated military song from the Third Reich), remains online. This is despite TikTok’s own policies stating that “coded messages used by hate groups to communicate without appearing hateful, such as keywords, symbols or sound trends,” violate platform rules.

For Richard Kuchta (ISD), “there are always alternative ways and workarounds that extremists very often use.” He adds: “It's like a cat-and-mouse game: the platform or regulators discover a problem, create some kind of mitigation, and bad actors or extremists actors find ways to circumvent these rules or the new mitigations adopted by the platforms.”

He also notes that “whenever there is clearly illegal content, platforms are required to manage and remove it.” Under the Digital Services Act (DSA), “once they are informed that they are hosting illegal content, they are becoming liable for hosting the content.” Therefore, he explains, in “clearly illegal” cases the content is typically removed, and he insists that “this does not affect freedom of expression, since we are talking about clearly illegal content.” The European regulation sets out measures covering the removal (Art. 7) of illegal content, such as child pornography, terrorist content, hate speech or the sale of illegal products.

One of the recommendations of studies such as Digital Dog Whistles: The New Online Language of Extremism is to train artificial intelligence processes “using manual researchers to label hateful content and provide a broader dataset against which to compare all uploaded content.” This could help detect posts that violate the platforms' community standards, although automated content moderation has also caused problems in the past. The study also advises “raising awareness of hateful content among users and encouraging or incentivizing its reporting, as this could significantly contribute to existing removal mechanisms.”

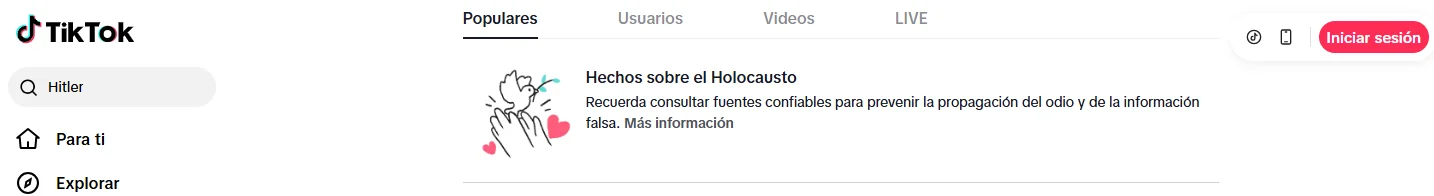

UNESCO, in its study on Holocaust denial on social media, suggests adding “verification labels that redirect users to accurate and reliable content.” TikTok, for example, states that it does not allow content that denies or downplays “well-documented historical events that have caused harm to protected groups,” such as denying the existence of the Holocaust. In fact, when you search for “Hitler” or “Nazi” in the platform's search engine, a message appears saying: “Remember to consult reliable sources to prevent the spread of hate and false information”, accompanied by a link to a “Facts About the Holocaust” website.

This is not the case when searching for “Francisco Franco” or “Francoism,” where content featuring the dictator's image appears (some of it with thousands of views). Kye Allen, who holds a doctorate in International Relations and is a researcher at the University of Oxford, says one limitation of content moderation is that the same identification and moderation processes are not applied consistently across languages.

Another example: when searching for “Kalergi Plan,” a conspiracy theory targeting the European Union that alleges a plot by international elites to wipe out the “white race,” TikTok returns no results and instead displays the message: “This phrase could be associated with hateful behavior.” But when the term is searched in Spanish, with the word order reversed (“Plan Kalergi”), multiple videos discussing the theory appear.

Another UNESCO recommendation is to invest in training content moderators on topics such as Holocaust denial and distortion, and antisemitism. Allen agrees that moderators on platforms like TikTok “may not always be aware of some very specific historical references.” For example, he explains, content commemorating the Blue Division, the Spaniards who fought alongside Nazi Germany during the Second World War, may go unnoticed by moderators.

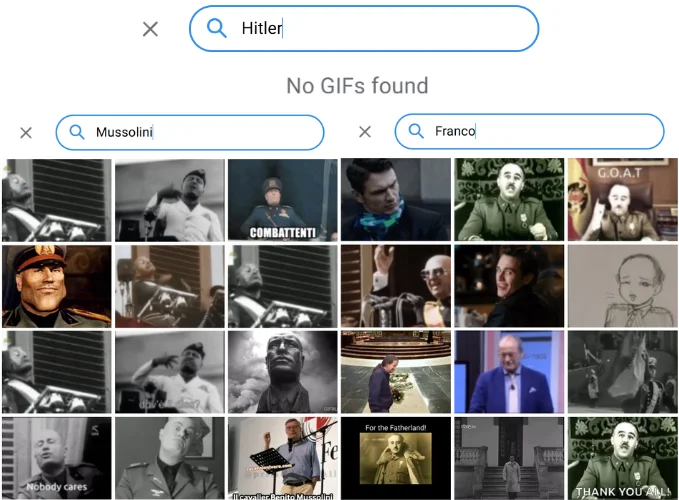

Maldita.es has found that, as a general rule, platforms impose stricter restrictions on content referencing Hitler or Nazism than on posts about other dictators or extremist movements. On Telegram, for example, a search for GIFs (an image format used to create short, looping animations from a sequence of frames) returns results for Franco and Mussolini but none for Hitler.

Methodology

This text is part of a cross-border research project conducted by Maldita.es (Spain) and Facta (Italy) between June and September 2025. During this period, we collected content from six platforms (Facebook, Instagram, Telegram, TikTok, X and YouTube), as well as from websites. In total, more than 500 pieces of content were recorded, and for each we gathered information such as the user, date of publication, format, language and impact. Only posts glorifying Francisco Franco, Benito Mussolini or Adolf Hitler, the measures and policies implemented during their respective dictatorships, or associated symbolism were selected. Each piece of content was analysed individually. From this analysis, the most frequently repeated terms and emojis were extracted in order to study their possible hidden meaning within this movement. Various academic studies and expert publications were consulted to better understand their meaning.

If you have any questions, please contact us at [email protected].

Memes, football and songs: how ‘Pop Fascism’ paves the way for disinformation

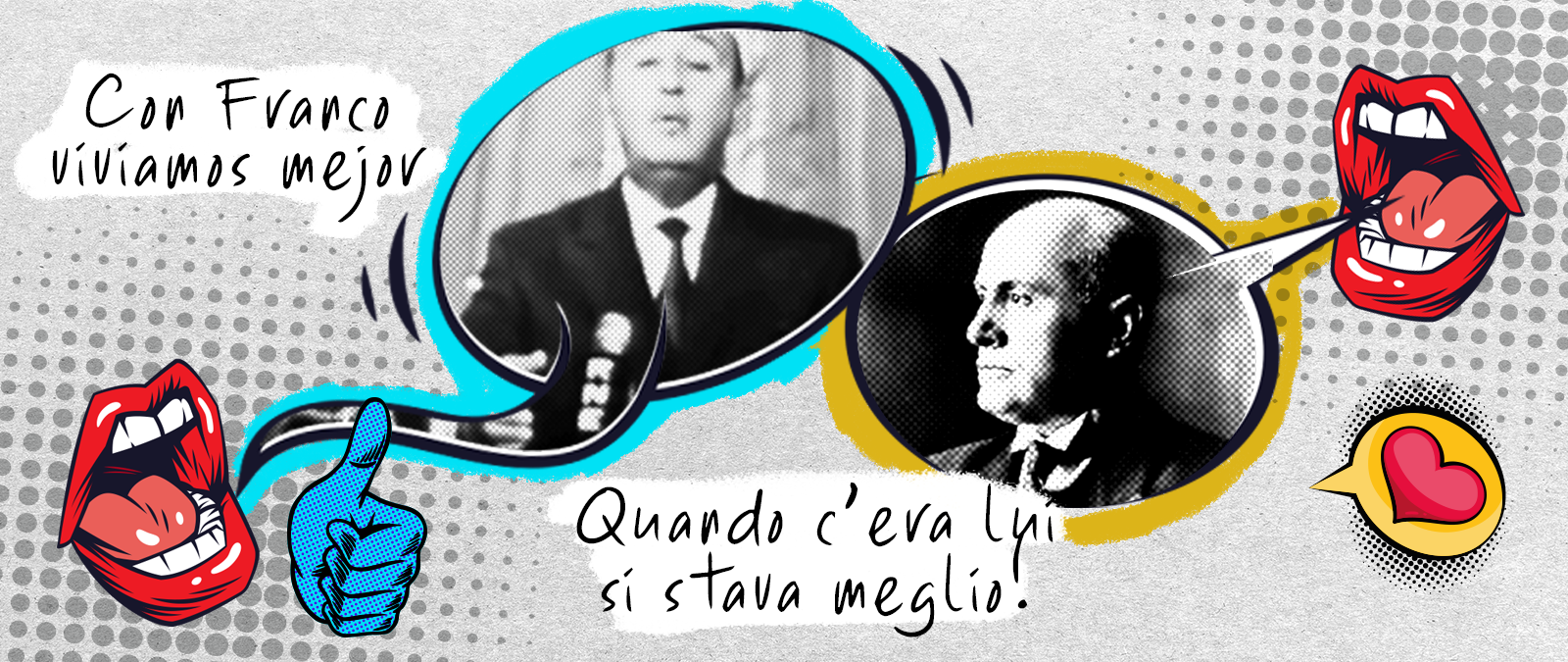

Memes, football and songs: how ‘Pop Fascism’ paves the way for disinformation “We lived better under Franco”: the narrative that triumphs on social media and seeks to rewrite history through disinformation and other manipulation strategies

“We lived better under Franco”: the narrative that triumphs on social media and seeks to rewrite history through disinformation and other manipulation strategies